jinjorge


@Squid There's a bug at https://github.com/Squidly271/fix.common.problems/blob/77d344ce93955e8efbc9560b1e1f14c9ab3e3e90/source/fix.common.problems/usr/local/emhttp/plugins/fix.common.problems/include/tests.php#L378. It needs a period/full stop and a space between the words in `questionBegin Investigation Here`. Would you like me to submit a PR with a fix?

-

@johnnie.black Thank you very much!!

-

I've observed this for all shares. The most active shares are Movies, TV Shows and Time Mach (Time Machine backups). I've attached the diagnostics zip. Thank you for your help. solamente-diagnostics-20181016-1017.zip

-

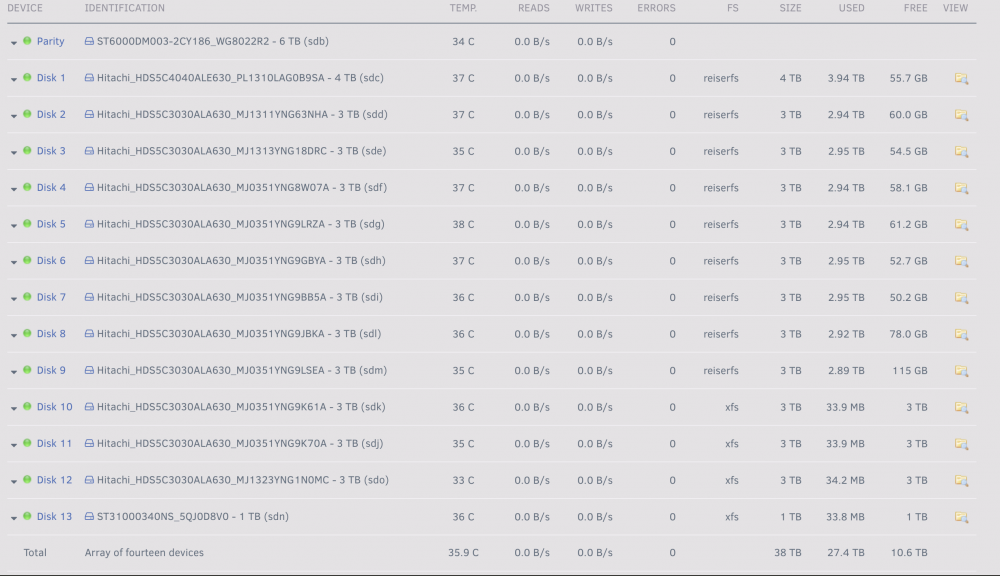

I've been experiencing this issue for quite some time, and it is not new to v6.6.1. I have an array with 1 parity drive and 13 storage drives. Of the 13 storage drives, 4 are XFS formatted. These 4 XFS formatted drives are virtually empty, whereas the other 9 reiserfs formatted drives are for the most part 95%+ full. Am I missing a basic config that's preventing data from being written to the XFS formatted drives?

-

I think your steps at a high level are correct. The pedantic me has the steps as follows:

1. Stop array
2. Power down unit
3. Replace 2TB drive with new 4TB drive
4. Power on unit - it will not automatically start, as it will detect a change in drives
5. Preclear the new 4TB drive
6. When the preclear completes, assign the 4TB drive as the new parity drive
7. Start the array - which should start a rebuild

Hope that's helpful

-


I ran v2.2 of the tunable script on my server with unRAID 6.2 before I noticed all the work @pauven and team did in the August/September timeframe. Any plans to release v4.0 of the script? One suggestion for this project, to hopefully lighten the load on @pauven, is to put the project on GitHub or your favorite open SCM so we can all contribute to it. Thoughts?

-

???

-

Updated with no issue. Parity check scheduled to run in about an hour. :)

-

Can you clarify why you thought the disks never spun up for the parity check? Like itimpi, I believe they did spin up and the parity check was running, but the display was not updated yet. There are a number of cases where the displayed spin state and the true spin state become out of sync, and I suspect that with this release there are even more cases now. One small change that would help is keeping the display of reads and writes updated. Since they are 'in memory' quantities, accessing them shouldn't affect anything. And any change in them could trigger a re-sync of spin state (and temps display). And a user could see from the reads and writes that the drive IS spinning, active, and know to wait for the spin state to be updated too.

In my case I don't think the disks were spun up, because the estimated time to complete the parity check kept going up, and that's what made me stop the check. From what I can recall, I saw 359 days, then it jumped up to 4000+ days. Yup, crazy!!

-

Let me know what you find. Thanks!!

-

Installed v6.1.2 via the UI update option. After rebooting, and not realizing that all disks had been spun down, I clicked on the "Check Parity" button and the disks never spun back up. I had to cancel the parity check, spin up the drives, then restart the parity check.

Sep 9 21:47:19 solamente avahi-daemon[2883]: Service "solamente-AFP" (/services/afp.service) successfully established.
Sep 9 22:02:16 solamente kernel: mdcmd (57): spindown 10
Sep 9 22:02:17 solamente kernel: mdcmd (58): spindown 11
Sep 9 22:02:18 solamente kernel: mdcmd (59): spindown 12
Sep 9 22:02:20 solamente kernel: mdcmd (60): spindown 0
Sep 9 22:02:21 solamente kernel: mdcmd (61): spindown 1
Sep 9 22:02:22 solamente kernel: mdcmd (62): spindown 2
Sep 9 22:02:22 solamente kernel: mdcmd (63): spindown 3
Sep 9 22:02:23 solamente kernel: mdcmd (64): spindown 4
Sep 9 22:02:24 solamente kernel: mdcmd (65): spindown 5
Sep 9 22:02:24 solamente kernel: mdcmd (66): spindown 6
Sep 9 22:02:25 solamente kernel: mdcmd (67): spindown 7
Sep 9 22:02:26 solamente kernel: mdcmd (68): spindown 8
Sep 9 22:02:26 solamente kernel: mdcmd (69): spindown 9
Sep 9 22:02:27 solamente kernel: mdcmd (70): spindown 13
Sep 9 22:31:18 solamente kernel: mdcmd (71): check CORRECT
Sep 9 22:31:18 solamente kernel: md: recovery thread woken up ...
Sep 9 22:31:18 solamente kernel: md: recovery thread checking parity...
Sep 9 22:31:18 solamente kernel: md: using 1536k window, over a total of 3907018532 blocks.
Sep 9 22:33:12 solamente kernel: mdcmd (72): nocheck
Sep 9 22:33:53 solamente kernel: md: md_do_sync: got signal, exit...
Sep 9 22:33:54 solamente kernel: md: recovery thread sync completion status: -4
Sep 9 22:34:57 solamente emhttp: Spinning up all drives...
Sep 9 22:34:57 solamente kernel: mdcmd (73): spinup 0
Sep 9 22:34:57 solamente kernel: mdcmd (74): spinup 1
Sep 9 22:34:57 solamente kernel: mdcmd (75): spinup 2
Sep 9 22:34:57 solamente kernel: mdcmd (76): spinup 3
Sep 9 22:34:57 solamente kernel: mdcmd (77): spinup 4
Sep 9 22:34:57 solamente kernel: mdcmd (78): spinup 5
Sep 9 22:34:57 solamente kernel: mdcmd (79): spinup 6
Sep 9 22:34:57 solamente kernel: mdcmd (80): spinup 7
Sep 9 22:34:57 solamente kernel: mdcmd (81): spinup 8
Sep 9 22:34:57 solamente kernel: mdcmd (82): spinup 9
Sep 9 22:34:57 solamente kernel: mdcmd (83): spinup 10
Sep 9 22:34:57 solamente kernel: mdcmd (84): spinup 11
Sep 9 22:34:57 solamente kernel: mdcmd (85): spinup 12
Sep 9 22:34:57 solamente kernel: mdcmd (86): spinup 13
Sep 9 22:35:09 solamente kernel: mdcmd (87): check CORRECT
Sep 9 22:35:09 solamente kernel: md: recovery thread woken up ...
Sep 9 22:35:09 solamente kernel: md: recovery thread checking parity...
Sep 9 22:35:09 solamente kernel: md: using 1536k window, over a total of 3907018532 blocks.
Sep 9 22:36:16 solamente emhttp: cmd: /usr/local/emhttp/plugins/dynamix/scripts/tail_log syslog
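For anyone who wants to check the same thing in their own syslog, here is a rough Python sketch that replays the `mdcmd` entries and reports which disks had been sent a `spindown` without a later `spinup` at the moment each `check` was issued. The regular expression and the function name `disks_down_at_each_check` are my own assumptions based on the log format above, not anything from unRAID itself, and it expects one log entry per line. It only tracks the logged commands; a disk can spin up on access without a log entry, so treat the result as a hint rather than the true spin state.

```python
import re

# Pattern inferred from the syslog excerpt above (an assumption, not an unRAID spec).
# Matches e.g. "mdcmd (57): spindown 10" or "mdcmd (72): nocheck" at the end of a line.
MDCMD_RE = re.compile(r"mdcmd \((\d+)\): (\w+)(?: (\S+))?\s*$")


def disks_down_at_each_check(log_text):
    """Return [(mdcmd sequence number, sorted disks still marked spun down), ...],
    one entry per "check" command found in the log text."""
    spun_down = set()
    results = []
    for line in log_text.splitlines():
        match = MDCMD_RE.search(line)
        if not match:
            continue  # not an mdcmd entry
        seq, cmd, arg = int(match.group(1)), match.group(2), match.group(3)
        if cmd == "spindown" and arg is not None:
            spun_down.add(int(arg))
        elif cmd == "spinup" and arg is not None:
            spun_down.discard(int(arg))
        elif cmd == "check":
            results.append((seq, sorted(spun_down)))
    return results


# A shortened sample using a few of the entries from the log above.
SAMPLE = """\
Sep 9 22:02:16 solamente kernel: mdcmd (57): spindown 10
Sep 9 22:02:20 solamente kernel: mdcmd (60): spindown 0
Sep 9 22:31:18 solamente kernel: mdcmd (71): check CORRECT
Sep 9 22:33:12 solamente kernel: mdcmd (72): nocheck
Sep 9 22:34:57 solamente kernel: mdcmd (73): spinup 0
Sep 9 22:34:57 solamente kernel: mdcmd (83): spinup 10
Sep 9 22:35:09 solamente kernel: mdcmd (87): check CORRECT
"""

for seq, down in disks_down_at_each_check(SAMPLE):
    print(seq, down)
```

On the shortened sample this prints `71 [0, 10]` for the first check and `87 []` for the second, which lines up with what I saw: the first check was issued while the disks were still marked spun down, the second after the spin up.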

-

Congrats to the whole team on the release of v6.0.0. Updated last night from the last 6rc. It was still performing a parity check when I checked this morning. I saw the chatter about the banner, which I guess had been turned off at some point. Turned it on - I like it :)

-

unRAID Server Release 6.0-beta15-x86_64 Available

jinjorge replied to limetech's topic in Announcements

Upgraded via the plugin manager from b12 to b15. The GUI is amazing and snappy. Read through all 19 pages of this thread. Things are pointing in the right direction. Great work everyone!!