-

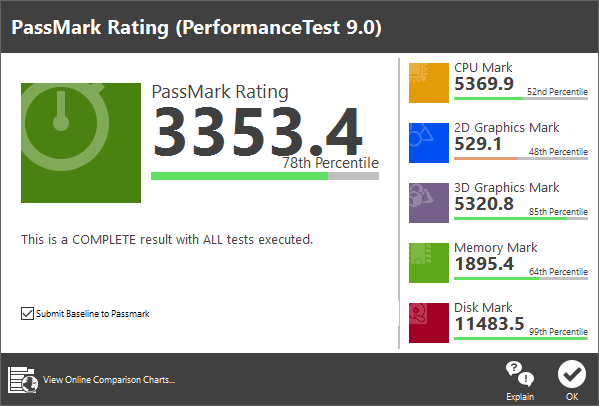

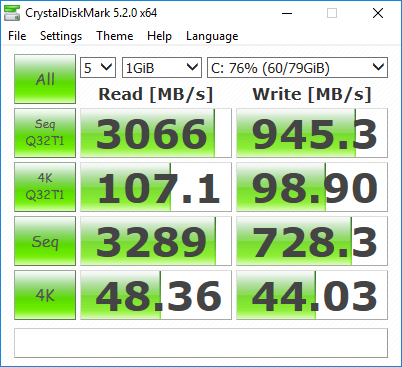

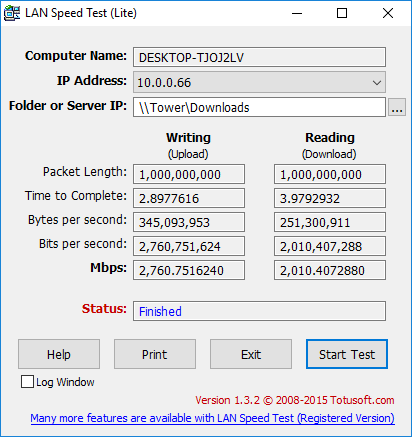

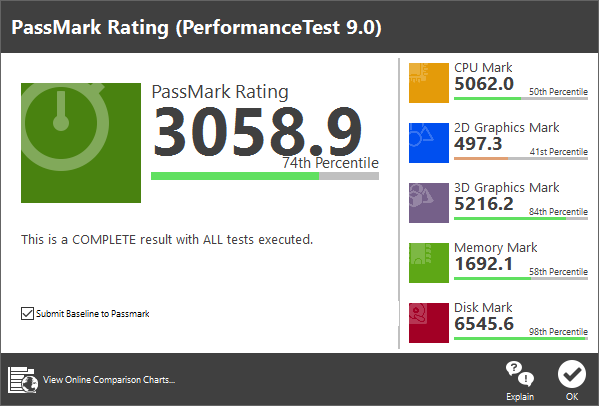

Bumping to prove that under certain conditions, great things can happen for no apparent reason, other than hopefully more optimal configuration settings.

I added the QEMU namespace to my domain tag:

    <domain type='kvm' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>

And I added the following override for the -cpu switch at the end, just before the closing domain tag:

    <qemu:commandline>
      <qemu:arg value='-cpu'/>
      <qemu:arg value='host,kvm=off,hv_relaxed,hv_spinlocks=0x1fff,hv_vapic,hv_time,hv_vendor_id=Microsoft'/>
    </qemu:commandline>

With that, I was able to eke out some more performance by turning on the hypervisor enlightenments, without tripping the Nvidia drivers. Doing it this way requires QEMU version 2.5.0. It is possible to pass that vendor_id argument through the features->hyperv block, but only in libvirt 1.3.3 or newer, or possibly 2.1.0.

On the left, my first post here. On the right, my most recent benchmark of the same VM setup, only with the enlightenments enabled.

Again, it's still possible to trick Nvidia's drivers by faking the vendor_id of the hypervisor, which they specifically blacklist. I chose Microsoft for the lulz.
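For anyone who would rather use the features->hyperv route, here is a rough sketch of what the equivalent domain XML might look like. Treat it as an untested translation of the -cpu flags, not a known-good unRAID config: `retries='8191'` is just 0x1fff in decimal, the `hypervclock` timer is assumed to stand in for hv_time, and `<kvm><hidden state='on'/>` is assumed to stand in for kvm=off. The `vendor_id` element is the part that needs the newer libvirt.

```xml
<!-- Sketch only: replaces the qemu:commandline -cpu override, assuming
     <cpu mode='host-passthrough'> is already set elsewhere in the domain. -->
<features>
  <acpi/>
  <apic/>
  <hyperv>
    <relaxed state='on'/>                      <!-- hv_relaxed -->
    <vapic state='on'/>                        <!-- hv_vapic -->
    <spinlocks state='on' retries='8191'/>     <!-- hv_spinlocks=0x1fff -->
    <vendor_id state='on' value='Microsoft'/>  <!-- hv_vendor_id=Microsoft -->
  </hyperv>
  <kvm>
    <hidden state='on'/>                       <!-- kvm=off -->
  </kvm>
</features>

<!-- hv_time is expressed as a clock timer rather than a hyperv feature -->
<clock offset='localtime'>
  <timer name='hypervclock' present='yes'/>
</clock>
```

If you go this way, the `<qemu:commandline>` block should presumably be dropped so the two don't fight over the -cpu arguments.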

-

Bumping this old topic to get it some more attention, especially since a branch with Nexenta ZFS-based TRIM support is waiting to be accepted into the mainline. I for one would love to see ZFS support replace BTRFS use: create an n-drive zpool based on the current cache drive setup, and create specialized, quota-limited ZFS datasets for the Docker and libvirt configuration mount points. Yes, Docker supports ZFS. And from what I've seen, one only wants to stick with BTRFS on a system they're ready to nuke at a moment's notice.

-

There is a trick to switching over:

1) Add another hard drive, maybe 1MB or slightly larger, and make it VirtIO.
2) Boot the VM.
3) Install the viostor drivers for your OS to support the tiny image you mounted above.
4) Shut down the VM.
5) Delete the mini temporary VirtIO drive.
6) Change your boot image to VirtIO.
7) It should boot fine now.
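In XML terms, the temporary drive and the final boot disk might look roughly like the fragments below. This is only a sketch: the file paths, the `virtio-temp.img` name, and the `hdc`/`hdd` target names are made up for illustration, and `type='raw'` assumes raw images.

```xml
<!-- Step 1: throwaway VirtIO disk, only there so Windows loads viostor.
     Path and target name are hypothetical. -->
<disk type='file' device='disk'>
  <driver name='qemu' type='raw'/>
  <source file='/mnt/user/domains/Windows 10/virtio-temp.img'/>
  <target dev='hdd' bus='virtio'/>
</disk>

<!-- Step 6: the real boot disk after switching its bus to VirtIO.
     Path is hypothetical. -->
<disk type='file' device='disk'>
  <driver name='qemu' type='raw'/>
  <source file='/mnt/user/domains/Windows 10/vdisk1.img'/>
  <target dev='hdc' bus='virtio'/>
  <boot order='1'/>
</disk>
```

If you edit the bus by hand, you will likely also need to delete the disk's old `<address type='drive' .../>` line so libvirt can assign a PCI address for the VirtIO device.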

-

Pass through PCIe sound card for docker or VM..?

kode54 replied to xthursdayx's topic in General Support

To install the driver in unRAID, he'd need a version of ALSA built for the correct kernel.

-

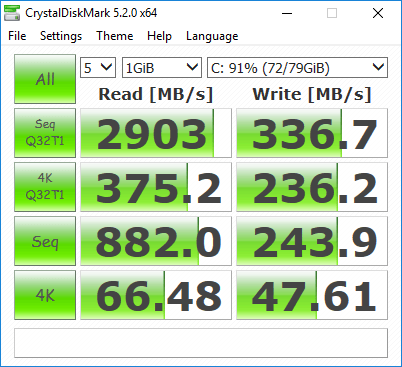

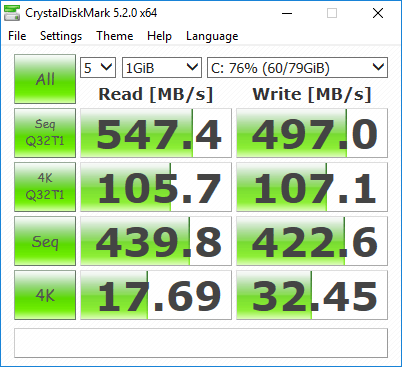

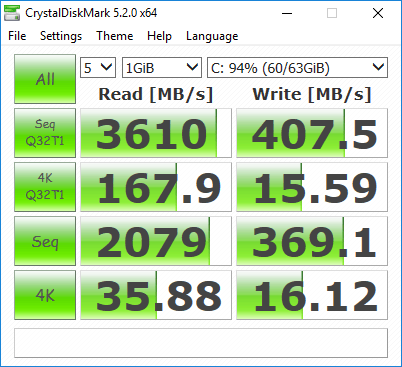

Updated CrystalDiskMark shots, with a new VM backed on an XFS cache drive.

The first one's using cache='none' io='native'.

The second one's using the defaults that the template editor always overwrites my settings with: cache='writeback', and no io parameter.

The io=native mode appears to reflect the actual drive performance, with the overhead of XFS and virtualization factored in.
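For reference, the two settings compared above live on the disk's `<driver>` line. A minimal sketch, assuming a raw image (the `type='raw'` attribute is an assumption, not taken from the benchmarked VM):

```xml
<!-- First run: bypass the host page cache and use Linux native AIO -->
<driver name='qemu' type='raw' cache='none' io='native'/>

<!-- Second run: the template editor's defaults, with no io attribute -->
<driver name='qemu' type='raw' cache='writeback'/>
```

Only one of the two lines goes inside a given `<disk>` element.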

-

Wtf?

    Dec 12 18:23:50 unraid root: plugin: running: /boot/packages/python-2.7.5-x86_64-1.txz
    Dec 12 18:23:50 unraid root:
    Dec 12 18:23:50 unraid root: +==============================================================================
    Dec 12 18:23:50 unraid root: | Upgrading python-2.7.9-x86_64-1 package using /boot/packages/python-2.7.5-x86_64-1.txz
    Dec 12 18:23:50 unraid root: +==============================================================================
    Dec 12 18:23:50 unraid root:
    Dec 12 18:23:50 unraid root: Pre-installing package python-2.7.5-x86_64-1...
    Dec 12 18:23:55 unraid root:
    Dec 12 18:23:55 unraid root: Removing package /var/log/packages/python-2.7.9-x86_64-1-upgraded-2016-12-12,18:23:50...

Then after taking the array online and offline a few times:

    Dec 12 18:25:20 unraid emhttp: unclean shutdown detected
    Dec 12 18:35:19 unraid sudo: root : TTY=unknown ; PWD=/ ; USER=nobody ; COMMAND=/usr/bin/deluged -c /mnt/cache/deluge -l /mnt/cache/deluge/deluged.log -P /var/run/deluged/deluged.pid

I see you are using a very messy plugin-based Deluge setup that plonks conflicting versions of Python on the system: first 2.7.9, then 2.7.5 replaces 2.7.9 like it's a newer version. And lots of Python libraries. And ZIP and RAR and Par2 and yenc utilities. And then the last thing it does at the end is start deluged, which may or may not be hanging the httpd because it never returns.

Maybe try finding a Docker package that does everything you want?

E: I also see you've got a weird Frankenstein mix of Reiser and XFS partitions, too. Yikes.

-

Windows 10 VM "native" resolution stucked to 800x600 on VNC mode

kode54 replied to donativo's topic in VM Engine (KVM)

6.3.0-rc already knows about the newer virtio ISO downloads. Presumably, none of these were added to the newer 6.2 releases because they were presumed to be incompatible with the older QEMU?

-

unRAID 6 NerdPack - CLI tools (iftop, iotop, screen, kbd, etc.)

kode54 replied to jonp's topic in Plugin Support

Proposing the inclusion of the atop package from SlackBuilds, for moments when it would help to see which resources are maxing out in a system. It handily displays color-coded load percentages for memory and disks, and blinks a status line red if a resource is being maxed out. May be useful in tracking down the overburdening issues some people are experiencing.

-

It is possible if you use virt-manager to configure your VMs. But you won't be able to connect to them from the WebUI, since there is no web SPICE client in unRAID. There are already performance issues with the stock noVNC client, so you may experience better performance if you use a native client.

-

Try deleting network.cfg, rebooting, and reconfiguring your network settings from scratch? That fixed my Docker issues from downgrading, and it may fix your Samba issues from upgrading. Maybe also keep a backup copy of the original, so you can see how it differs from the recreated version.

-

Found the Dockerfile, which imports from this base image. Looks like you just mount anything you want to be accessible to the image. A simple start is to mount /mnt as /mnt, read-only. Then you forward any port(s) you want to access to whatever public ports you want to access them on. The base image exposes an HTTPd with a web RDP client on port 8080, and a direct RDP service on port 3389.

-

My results are also somewhat different. I've also taken to using isolcpus and giving the virtual machine only two cores / four threads. I also have the two disk images hosted on an lz4-compressed ZFS pool on my SSDs.

    <domain type='kvm' id='1'>
      <name>Windows 10</name>
      <uuid>xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx</uuid>
      <metadata>
        <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
      </metadata>
      <memory unit='KiB'>8388608</memory>
      <currentMemory unit='KiB'>8388608</currentMemory>
      <memoryBacking>
        <nosharepages/>
        <locked/>
      </memoryBacking>
      <vcpu placement='static'>4</vcpu>
      <cputune>
        <vcpupin vcpu='0' cpuset='2'/>
        <vcpupin vcpu='1' cpuset='3'/>
        <vcpupin vcpu='2' cpuset='6'/>
        <vcpupin vcpu='3' cpuset='7'/>
      </cputune>
      <resource>
        <partition>/machine</partition>
      </resource>
      <os>
        <type arch='x86_64' machine='pc-i440fx-2.5'>hvm</type>
        <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
        <nvram>/etc/libvirt/qemu/nvram/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx_VARS-pure-efi.fd</nvram>
      </os>
      <features>
        <acpi/>
        <apic/>
      </features>
      <cpu mode='host-passthrough'>
        <topology sockets='1' cores='2' threads='2'/>
      </cpu>
      <clock offset='localtime'>
        <timer name='rtc' tickpolicy='catchup'/>
        <timer name='pit' tickpolicy='delay'/>
        <timer name='hpet' present='no'/>
      </clock>
      <on_poweroff>destroy</on_poweroff>
      <on_reboot>restart</on_reboot>
      <on_crash>restart</on_crash>
      <devices>
        <emulator>/usr/local/sbin/qemu</emulator>
        <disk type='file' device='disk'>
          <driver name='qemu' type='raw' cache='writeback'/>
          <source file='/mnt/zfs/domains/Windows 10/vdisk1.img'/>
          <backingStore/>
          <target dev='hdc' bus='virtio'/>
          <boot order='1'/>
          <alias name='virtio-disk2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
        </disk>
        <disk type='file' device='disk'>
          <driver name='qemu' type='raw' cache='writeback'/>
          <source file='/mnt/zfs/domains/Windows 10/programming.img'/>
          <backingStore/>
          <target dev='hdd' bus='virtio'/>
          <alias name='virtio-disk3'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>
        </disk>
        <disk type='file' device='cdrom'>
          <driver name='qemu' type='raw'/>
          <source file='/mnt/disk1/Purgatory/CD Images/vs2015.com_enu.iso'/>
          <backingStore/>
          <target dev='hda' bus='ide'/>
          <readonly/>
          <boot order='2'/>
          <alias name='ide0-0-0'/>
          <address type='drive' controller='0' bus='0' target='0' unit='0'/>
        </disk>
        <controller type='usb' index='0' model='nec-xhci'>
          <alias name='usb'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
        </controller>
        <controller type='pci' index='0' model='pci-root'>
          <alias name='pci.0'/>
        </controller>
        <controller type='ide' index='0'>
          <alias name='ide'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
        </controller>
        <controller type='virtio-serial' index='0'>
          <alias name='virtio-serial0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
        </controller>
        <interface type='bridge'>
          <mac address='xx:xx:xx:xx:xx:xx'/>
          <source bridge='br0'/>
          <target dev='vnet0'/>
          <model type='virtio'/>
          <alias name='net0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
        </interface>
        <serial type='pty'>
          <source path='/dev/pts/1'/>
          <target port='0'/>
          <alias name='serial0'/>
        </serial>
        <console type='pty' tty='/dev/pts/1'>
          <source path='/dev/pts/1'/>
          <target type='serial' port='0'/>
          <alias name='serial0'/>
        </console>
        <channel type='unix'>
          <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-Windows 10/org.qemu.guest_agent.0'/>
          <target type='virtio' name='org.qemu.guest_agent.0' state='connected'/>
          <alias name='channel0'/>
          <address type='virtio-serial' controller='0' bus='0' port='1'/>
        </channel>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
          </source>
          <alias name='hostdev0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x00' slot='0x1b' function='0x0'/>
          </source>
          <alias name='hostdev1'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x01' slot='0x00' function='0x1'/>
          </source>
          <alias name='hostdev2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
        </hostdev>
        <memballoon model='virtio'>
          <alias name='balloon0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
        </memballoon>
      </devices>
    </domain>

-

Quick add on scrapping the optical drives: I tend to keep exactly one optical drive around after I'm fully installed, with no <source> line, implying empty drive. This way, I can use virsh or virt-manager to hot mount images if necessary. You can't hot attach or detach drives, though.
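An empty optical drive like that might look roughly like this in the domain XML. It is a sketch modeled on a typical IDE cdrom entry; the `hda` target name and the `ide` bus are assumptions and may differ on your VM.

```xml
<disk type='file' device='cdrom'>
  <driver name='qemu' type='raw'/>
  <!-- no <source> element here: the drive exists, but the tray is empty -->
  <target dev='hda' bus='ide'/>
  <readonly/>
</disk>
```

With the drive defined this way, an image can be inserted or ejected later without touching the rest of the VM definition.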

-

Note that this only works for snapshots taken while the VM is powered off. Live snapshots will require the memory and system state data from the /var/lib/libvirt/images directory. Of course, if you're passing through anything other than USB devices, you're already prevented from taking live snapshots.