yippy3000

Members-

Posts

75 -


Everything posted by yippy3000

-

[Support] Linuxserver.io - Unifi-Controller

yippy3000 replied to linuxserver.io's topic in Docker Containers

It seems it was a corrupt database. I don't know whether it was related to the upgrade, but the MongoDB database got corrupted, and restoring the appdata folder from a backup fixed it.

-

[Support] Linuxserver.io - Unifi-Controller

yippy3000 replied to linuxserver.io's topic in Docker Containers

After upgrading to Unraid 6.8 the controller suddenly doesn't work. It appears to start up just fine but I can't access the controller using an IP or via the cloud. The container logs look fine too:

[s6-init] making user provided files available at /var/run/s6/etc...exited 0.
[s6-init] ensuring user provided files have correct perms...exited 0.
[fix-attrs.d] applying ownership & permissions fixes...
[fix-attrs.d] done.
[cont-init.d] executing container initialization scripts...
[cont-init.d] 10-adduser: executing...
-------------------------------------
      _         ()
     | |  ___   _    __
     | | / __| | |  /  \
     | | \__ \ | | | () |
     |_| |___/ |_|  \__/

Brought to you by linuxserver.io
We gratefully accept donations at:
https://www.linuxserver.io/donate/
-------------------------------------
GID/UID
-------------------------------------
User uid: 99
User gid: 100
-------------------------------------
[cont-init.d] 10-adduser: exited 0.
[cont-init.d] 20-config: executing...
[cont-init.d] 20-config: exited 0.
[cont-init.d] 30-keygen: executing...
[cont-init.d] 30-keygen: exited 0.
[cont-init.d] 99-custom-scripts: executing...
[custom-init] no custom files found exiting...
[cont-init.d] 99-custom-scripts: exited 0.
[cont-init.d] done.
[services.d] starting services
[services.d] done.

-

I am starting to get a very consistent error with one of my backup jobs to Backblaze: the CloudBerry process crashes with the error "Plan process stopped with system error. Send logs to support". The logs show:

2019-07-18 09:32:53,762451 [INFO ]: [ CBB ] [ 7 ] End Listing : Status: QProcess::ExitStatus(CrashExit) code 11
2019-07-18 09:32:53,784091 [INFO ]: [ CBB ] [ 7 ] Update destination statistic, id: {fe9d330d-0d5f-4971-bdc4-31fcf9a5da66}

CloudBerry support says: "Usually that error occurs when open GUI or use web UI during the backup run. That is why as workaround I can only suggest not to open the GUI when the backup plan is running and let us know if that helps."

Is there any way to close the GUI, or prevent it from running, to work around this?

-

+1. I have found that some Docker containers (e.g. https://hub.docker.com/r/netboxcommunity/netbox/) claim to require it, so not having it keeps me from using them.

-

[Support] Djoss - CrashPlan PRO (aka CrashPlan for Small Business)

yippy3000 replied to Djoss's topic in Docker Containers

Follow-up: it seems that my connection issue is on the CrashPlan side. Chat support said my server is having issues. Now, having issues for 5 days is... unacceptable, even for just a backup.

-

[Support] Djoss - CrashPlan PRO (aka CrashPlan for Small Business)

yippy3000 replied to Djoss's topic in Docker Containers

Both. Currently the UI says "Unable to connect to destination" under the CrashPlan Central backup set. App.log says it is trying to connect to dgc-sea.crashplan.com and is not getting a response.

-

[Support] Djoss - CrashPlan PRO (aka CrashPlan for Small Business)

yippy3000 replied to Djoss's topic in Docker Containers

My container hasn't been able to connect to CrashPlan Central for the last 4 days. Is anyone else having this issue?

-

There seems to be a version mismatch in the Docker container. Logging/statistics keep breaking or stopping, and the fix is to delete /etc/dnsmasq.d/01-pihole.conf and restart the container. I figured this out from the developer here:

This broke again after the Unraid 6.5.3 upgrade and reboot, and the same fix worked, which makes me think the container has the wrong config file set as the default.
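The workaround described above could be scripted roughly as follows. This is only a sketch: the container name `pihole` and the helper names are assumptions for illustration, and it defaults to a dry run that just prints the two Docker commands instead of running them.

```python
import subprocess
from typing import List

# Path taken from the post; the container name used below is an assumption.
PIHOLE_CONF = "/etc/dnsmasq.d/01-pihole.conf"


def pihole_fix_commands(container: str) -> List[List[str]]:
    """Build the two commands for the workaround: delete the file, then restart."""
    return [
        ["docker", "exec", container, "rm", "-f", PIHOLE_CONF],
        ["docker", "restart", container],
    ]


def apply_fix(container: str, dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them in order."""
    for cmd in pihole_fix_commands(container):
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops at the first command that fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply_fix("pihole")  # hypothetical container name; dry run only
```

Calling `apply_fix("pihole", dry_run=False)` would actually run the commands, so check the container name against `docker ps` first.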

-

According to this page, 5.7 is Stable: https://help.ubnt.com/hc/en-us/articles/115000441548-UniFi-Current-Controller-Versions. Is that not how it is labeled in the APT repository?

-

I am seeing the same with the latest update. In addition, it seems to be chewing through my docker.img space at a rate of 1% every 5 minutes. The latest update really broke something.
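To put that rate in perspective, here is a small back-of-the-envelope calculation. The 40% starting usage is a made-up figure for illustration, and the function name is hypothetical; only the 1% per 5 minutes rate comes from the post.

```python
def minutes_until_full(used_percent: float,
                       rate_percent: float = 1.0,
                       interval_minutes: float = 5.0) -> float:
    """Estimate minutes until docker.img hits 100%, assuming linear growth."""
    if rate_percent <= 0:
        raise ValueError("rate_percent must be positive")
    remaining = max(0.0, 100.0 - used_percent)
    return remaining / rate_percent * interval_minutes


if __name__ == "__main__":
    # Hypothetical starting point: image is 40% full when the growth starts.
    minutes = minutes_until_full(40.0)
    print(f"{minutes:.0f} minutes (~{minutes / 60:.1f} hours) until full")
```

At that rate even an empty image would fill in a little over 8 hours, so the growth is worth tracking down quickly.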

-

For me, after several rounds of reboots, it seems to have fixed itself. The problem hasn't come back in the last 5 days.

-

After several reboots it seems to have fixed itself. The problem hasn't come back in the last 5 days.

-

My issue is also the same nginx error, and I have 32 GB of RAM at 44% usage.

-

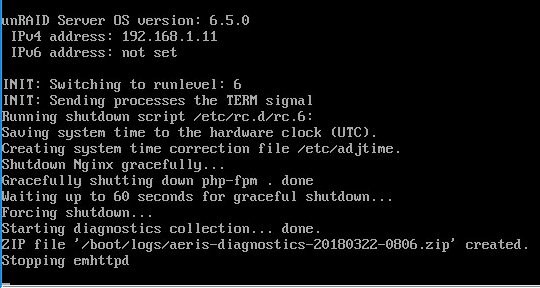

I am having quite a few issues that I suspect are all related. After a reboot, everything works perfectly fine for a while (a few hours). Then I get the following problems:

WebGui async loads stop working. This affects the Docker section on the Dashboard (never loads), the Docker tab (never loads), Fix Common Problems (the "Scanning" modal never goes away) and Community Applications (loading spinner forever).

The next reboot will hang on "stopping emhttpd" and never reboot. SSHing in and typing reboot will force the reboot.

When this happens I get two types of errors in the syslog:

Timeout:

Mar 19 09:35:17 Aeris nginx: 2018/03/19 09:35:17 [error] 2797#2797: *492724 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 192.168.1.103, server: , request: "POST /webGui/include/DashboardApps.php HTTP/2.0", upstream: "fastcgi://unix:/var/run/php5-fpm.sock", host: "192.168.1.11", referrer: "https://192.168.1.11/Dashboard"
Mar 19 10:34:14 Aeris emhttpd: req (5): csrf_token=****************&title=System+Log&cmd=%2FwebGui%2Fscripts%2Ftail_log&arg1=syslog
Mar 19 10:34:14 Aeris emhttpd: cmd: /usr/local/emhttp/plugins/dynamix/scripts/tail_log syslog
Mar 19 10:36:11 Aeris nginx: 2018/03/19 10:36:11 [error] 2797#2797: *501208 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 192.168.1.103, server: , request: "POST /plugins/dynamix.docker.manager/include/DockerUpdate.php HTTP/2.0", upstream: "fastcgi://unix:/var/run/php5-fpm.sock", host: "aeris.local", referrer: "https://aeris.local/Docker"
Mar 19 10:44:04 Aeris nginx: 2018/03/19 10:44:04 [error] 2797#2797: *502066 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 192.168.1.103, server: , request: "POST /webGui/include/DashboardApps.php HTTP/2.0", upstream: "fastcgi://unix:/var/run/php5-fpm.sock", host: "1010b0199efcaf4121d36289812113a82758b8a5.unraid.net", referrer: "https://hash.unraid.net/Dashboard"
Mar 19 10:48:16 Aeris nginx: 2018/03/19 10:48:16 [error] 2797#2797: *502066 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 192.168.1.103, server: , request: "POST /plugins/dynamix.docker.manager/include/DockerUpdate.php HTTP/2.0", upstream: "fastcgi://unix:/var/run/php5-fpm.sock", host: "1010b0199efcaf4121d36289812113a82758b8a5.unraid.net", referrer: "https://hash.unraid.net/Docker"

Call Trace:

Mar 20 03:54:05 Aeris kernel: ------------[ cut here ]------------
Mar 20 03:54:05 Aeris kernel: WARNING: CPU: 0 PID: 3 at net/netfilter/nf_conntrack_core.c:769 __nf_conntrack_confirm+0x97/0x4d6
Mar 20 03:54:05 Aeris kernel: Modules linked in: macvlan xt_nat veth ipt_MASQUERADE nf_nat_masquerade_ipv4 iptable_nat nf_conntrack_ipv4 nf_defrag_ipv4 nf_nat_ipv4 iptable_filter ip_tables nf_nat xfs nfsd lockd grace sunrpc md_mod bonding e1000e igb ptp pps_core ipmi_ssif x86_pkg_temp_thermal intel_powerclamp coretemp kvm_intel kvm crct10dif_pclmul crc32_pclmul crc32c_intel ghash_clmulni_intel pcbc ast ttm aesni_intel drm_kms_helper aes_x86_64 crypto_simd glue_helper cryptd drm intel_cstate intel_uncore intel_rapl_perf agpgart i2c_i801 i2c_algo_bit i2c_core mpt3sas syscopyarea sysfillrect ahci sysimgblt fb_sys_fops libahci raid_class scsi_transport_sas video ie31200_edac backlight ipmi_si acpi_power_meter thermal acpi_pad button fan [last unloaded: pps_core]
Mar 20 03:54:05 Aeris kernel: CPU: 0 PID: 3 Comm: kworker/0:0 Tainted: G W 4.14.26-unRAID #1
Mar 20 03:54:05 Aeris kernel: Hardware name: To Be Filled By O.E.M. To Be Filled By O.E.M./E3C236D2I, BIOS P2.30 07/20/2017
Mar 20 03:54:05 Aeris kernel: Workqueue: events macvlan_process_broadcast [macvlan]
Mar 20 03:54:05 Aeris kernel: task: ffff88084bea1d00 task.stack: ffffc90003178000
Mar 20 03:54:05 Aeris kernel: RIP: 0010:__nf_conntrack_confirm+0x97/0x4d6
Mar 20 03:54:05 Aeris kernel: RSP: 0018:ffff88086fc03d30 EFLAGS: 00010202
Mar 20 03:54:05 Aeris kernel: RAX: 0000000000000188 RBX: 000000000000cf32 RCX: 0000000000000001
Mar 20 03:54:05 Aeris kernel: RDX: 0000000000000001 RSI: 0000000000000001 RDI: ffffffff81c092c8
Mar 20 03:54:05 Aeris kernel: RBP: ffff8802a12c7b00 R08: 0000000000000101 R09: ffff880213aa1500
Mar 20 03:54:05 Aeris kernel: R10: ffff88068eb0884e R11: 0000000000000000 R12: ffffffff81c8b040
Mar 20 03:54:05 Aeris kernel: R13: 00000000000071a1 R14: ffff88041537db80 R15: ffff88041537dbd8
Mar 20 03:54:05 Aeris kernel: FS: 0000000000000000(0000) GS:ffff88086fc00000(0000) knlGS:0000000000000000
Mar 20 03:54:05 Aeris kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
Mar 20 03:54:05 Aeris kernel: CR2: 0000152f80165890 CR3: 0000000001c0a004 CR4: 00000000003606f0
Mar 20 03:54:05 Aeris kernel: DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000
Mar 20 03:54:05 Aeris kernel: DR3: 0000000000000000 DR6: 00000000fffe0ff0 DR7: 0000000000000400
Mar 20 03:54:05 Aeris kernel: Call Trace:
Mar 20 03:54:05 Aeris kernel: <IRQ>
Mar 20 03:54:05 Aeris kernel: ipv4_confirm+0xac/0xb4 [nf_conntrack_ipv4]
Mar 20 03:54:05 Aeris kernel: nf_hook_slow+0x37/0x96
Mar 20 03:54:05 Aeris kernel: ip_local_deliver+0xab/0xd3
Mar 20 03:54:05 Aeris kernel: ? inet_del_offload+0x3e/0x3e
Mar 20 03:54:05 Aeris kernel: ip_rcv+0x311/0x346
Mar 20 03:54:05 Aeris kernel: ? ip_local_deliver_finish+0x1b8/0x1b8
Mar 20 03:54:05 Aeris kernel: __netif_receive_skb_core+0x6ba/0x733
Mar 20 03:54:05 Aeris kernel: ? e1000_irq_enable+0x5a/0x67 [e1000e]
Mar 20 03:54:05 Aeris kernel: process_backlog+0x8c/0x12d
Mar 20 03:54:05 Aeris kernel: net_rx_action+0xfb/0x24f
Mar 20 03:54:05 Aeris kernel: __do_softirq+0xcd/0x1c2
Mar 20 03:54:05 Aeris kernel: do_softirq_own_stack+0x2a/0x40
Mar 20 03:54:05 Aeris kernel: </IRQ>
Mar 20 03:54:05 Aeris kernel: do_softirq+0x46/0x52
Mar 20 03:54:05 Aeris kernel: netif_rx_ni+0x21/0x35
Mar 20 03:54:05 Aeris kernel: macvlan_broadcast+0x117/0x14f [macvlan]
Mar 20 03:54:05 Aeris kernel: ? __clear_rsb+0x25/0x3d
Mar 20 03:54:05 Aeris kernel: macvlan_process_broadcast+0xe4/0x114 [macvlan]
Mar 20 03:54:05 Aeris kernel: process_one_work+0x14c/0x23f
Mar 20 03:54:05 Aeris kernel: ? rescuer_thread+0x258/0x258
Mar 20 03:54:05 Aeris kernel: worker_thread+0x1c3/0x292
Mar 20 03:54:05 Aeris kernel: kthread+0x111/0x119
Mar 20 03:54:05 Aeris kernel: ? kthread_create_on_node+0x3a/0x3a
Mar 20 03:54:05 Aeris kernel: ? kthread_create_on_node+0x3a/0x3a
Mar 20 03:54:05 Aeris kernel: ret_from_fork+0x35/0x40
Mar 20 03:54:05 Aeris kernel: Code: 48 c1 eb 20 89 1c 24 e8 24 f9 ff ff 8b 54 24 04 89 df 89 c6 41 89 c5 e8 a9 fa ff ff 84 c0 75 b9 49 8b 86 80 00 00 00 a8 08 74 02 <0f> 0b 4c 89 f7 e8 03 ff ff ff 49 8b 86 80 00 00 00 0f ba e0 09
Mar 20 03:54:05 Aeris kernel: ---[ end trace 52bfcc756559bffe ]---

Anyone have any ideas? It is getting really annoying to reboot (and then SSH in and force the reboot) every time I want to manage dockers, view Community Applications or check Fix Common Problems.

aeris-diagnostics-20180322-0806.zip
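To see which WebGui endpoints are timing out most often, the syslog can be summarized with a short script. This is a minimal sketch, not an Unraid tool: the function name and the regular expression are my own, and the sample lines are abridged copies of the entries quoted above.

```python
import re
from collections import Counter

# Matches nginx "upstream timed out" entries and captures the path from the
# quoted request line. The pattern is an assumption based on the lines above.
TIMEOUT_RE = re.compile(
    r'upstream timed out .*?request: "(?:GET|POST) (\S+) HTTP/[\d.]+"'
)


def count_upstream_timeouts(syslog_text: str) -> Counter:
    """Return how many upstream timeouts were logged per requested path."""
    return Counter(m.group(1) for m in TIMEOUT_RE.finditer(syslog_text))


# Abridged sample: the trailing upstream/host/referrer fields are left out.
SAMPLE = "\n".join([
    'Mar 19 09:35:17 Aeris nginx: 2018/03/19 09:35:17 [error] 2797#2797: '
    '*492724 upstream timed out (110: Connection timed out) while reading '
    'response header from upstream, client: 192.168.1.103, server: , '
    'request: "POST /webGui/include/DashboardApps.php HTTP/2.0"',
    'Mar 19 10:34:14 Aeris emhttpd: cmd: '
    '/usr/local/emhttp/plugins/dynamix/scripts/tail_log syslog',
    'Mar 19 10:36:11 Aeris nginx: 2018/03/19 10:36:11 [error] 2797#2797: '
    '*501208 upstream timed out (110: Connection timed out) while reading '
    'response header from upstream, client: 192.168.1.103, server: , '
    'request: "POST /plugins/dynamix.docker.manager/include/DockerUpdate.php '
    'HTTP/2.0"',
    'Mar 19 10:44:04 Aeris nginx: 2018/03/19 10:44:04 [error] 2797#2797: '
    '*502066 upstream timed out (110: Connection timed out) while reading '
    'response header from upstream, client: 192.168.1.103, server: , '
    'request: "POST /webGui/include/DashboardApps.php HTTP/2.0"',
])

if __name__ == "__main__":
    # Print the endpoints ordered by number of timeouts.
    for path, hits in count_upstream_timeouts(SAMPLE).most_common():
        print(f"{hits:3d}  {path}")
```

To run it against a real log, read the syslog file from the diagnostics zip into a string and pass that in place of `SAMPLE`.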

-

I also have this issue. Rebooting fixes it for a couple of hours, then it comes back. The issue also affects the Docker display on the dashboard for me. Fix Common Problems becomes unusable too: when you load the tab it scans forever and never finishes.

-

unRAID OS version 6.5.0 Stable Release Available

yippy3000 replied to limetech's topic in Announcements

I did the first time around, but here are my diagnostics again after the Docker page stopped loading again.

aeris-diagnostics-20180319-0827.zip

-

unRAID OS version 6.5.0 Stable Release Available

yippy3000 replied to limetech's topic in Announcements

And after a few days the issue is back. The WebGUI won't load the Docker status and the logs show this:

Mar 18 10:23:59 Aeris nginx: 2018/03/18 10:23:59 [error] 2797#2797: *349587 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 192.168.1.103, server: , request: "POST /plugins/dynamix.docker.manager/include/DockerUpdate.php HTTP/2.0", upstream: "fastcgi://unix:/var/run/php5-fpm.sock", host: "192.168.1.11", referrer: "https://192.168.1.11/Docker"

-

unRAID OS version 6.5.0 Stable Release Available

yippy3000 replied to limetech's topic in Announcements

I did reboot after the upgrade. A second reboot hung, but now things seem to be working after I forced the reboot via SSH.

-

unRAID OS version 6.5.0 Stable Release Available

yippy3000 replied to limetech's topic in Announcements

Just rebooted. It hung on reboot and dumped a diagnostic log. SSHed in and sent the reboot command and now things seem to be working but

aeris-diagnostics-20180316-0936.zip

-

unRAID OS version 6.5.0 Stable Release Available

yippy3000 replied to limetech's topic in Announcements

I am having this exact same issue too. Dockers are working but I can't manage them anymore!

-

I am getting some call traces to do with the IP stack and also getting timeouts when trying to load certain pages in the web GUI, specifically the Docker and Fix Common Problems pages.

Timeout message:

Mar 16 08:16:18 Aeris root: Fix Common Problems Version 2018.03.15a
Mar 16 08:18:18 Aeris nginx: 2018/03/16 08:18:18 [error] 6475#6475: *151961 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 192.168.1.103, server: , request: "POST /plugins/fix.common.problems/include/fixExec.php HTTP/2.0", upstream: "fastcgi://unix:/var/run/php5-fpm.sock", host: "1010b0199efcaf4121d36289812113a82758b8a5.unraid.net", referrer: "https://1010b0199efcaf4121d36289812113a82758b8a5.unraid.net/Settings/FixProblems"

Call Trace:

Mar 16 08:31:32 Aeris kernel: ------------[ cut here ]------------
Mar 16 08:31:32 Aeris kernel: WARNING: CPU: 0 PID: 63 at net/netfilter/nf_conntrack_core.c:769 __nf_conntrack_confirm+0x97/0x4d6
Mar 16 08:31:32 Aeris kernel: Modules linked in: macvlan xt_nat veth ipt_MASQUERADE nf_nat_masquerade_ipv4 iptable_nat nf_conntrack_ipv4 nf_defrag_ipv4 nf_nat_ipv4 iptable_filter ip_tables nf_nat xfs nfsd lockd grace sunrpc md_mod bonding e1000e igb ptp pps_core ipmi_ssif x86_pkg_temp_thermal intel_powerclamp coretemp kvm_intel kvm crct10dif_pclmul crc32_pclmul crc32c_intel ghash_clmulni_intel pcbc ast ttm drm_kms_helper aesni_intel aes_x86_64 crypto_simd glue_helper cryptd drm intel_cstate intel_uncore intel_rapl_perf agpgart i2c_i801 i2c_algo_bit i2c_core mpt3sas ahci libahci syscopyarea sysfillrect raid_class sysimgblt ie31200_edac video scsi_transport_sas fb_sys_fops backlight acpi_power_meter thermal acpi_pad button fan ipmi_si [last unloaded: pps_core]
Mar 16 08:31:32 Aeris kernel: CPU: 0 PID: 63 Comm: kworker/0:1 Tainted: G W 4.14.26-unRAID #1
Mar 16 08:31:32 Aeris kernel: Hardware name: To Be Filled By O.E.M. To Be Filled By O.E.M./E3C236D2I, BIOS P2.30 07/20/2017
Mar 16 08:31:32 Aeris kernel: Workqueue: events macvlan_process_broadcast [macvlan]
Mar 16 08:31:32 Aeris kernel: task: ffff88084bae2b80 task.stack: ffffc90003388000
Mar 16 08:31:32 Aeris kernel: RIP: 0010:__nf_conntrack_confirm+0x97/0x4d6
Mar 16 08:31:32 Aeris kernel: RSP: 0018:ffff88086fc03d30 EFLAGS: 00010202
Mar 16 08:31:32 Aeris kernel: RAX: 0000000000000188 RBX: 0000000000009595 RCX: 0000000000000001
Mar 16 08:31:32 Aeris kernel: RDX: 0000000000000001 RSI: 0000000000000001 RDI: ffffffff81c08ef8
Mar 16 08:31:32 Aeris kernel: RBP: ffff8807fee9f900 R08: 0000000000000101 R09: ffff880311d87c00
Mar 16 08:31:32 Aeris kernel: R10: ffff88048eaa084e R11: 0000000000000000 R12: ffffffff81c8b040
Mar 16 08:31:32 Aeris kernel: R13: 000000000000da3e R14: ffff88073b2228c0 R15: ffff88073b222918
Mar 16 08:31:32 Aeris kernel: FS: 0000000000000000(0000) GS:ffff88086fc00000(0000) knlGS:0000000000000000
Mar 16 08:31:32 Aeris kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
Mar 16 08:31:32 Aeris kernel: CR2: 00001525b43f3000 CR3: 0000000001c0a006 CR4: 00000000003606f0
Mar 16 08:31:32 Aeris kernel: DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000
Mar 16 08:31:32 Aeris kernel: DR3: 0000000000000000 DR6: 00000000fffe0ff0 DR7: 0000000000000400
Mar 16 08:31:32 Aeris kernel: Call Trace:
Mar 16 08:31:32 Aeris kernel: <IRQ>
Mar 16 08:31:32 Aeris kernel: ipv4_confirm+0xac/0xb4 [nf_conntrack_ipv4]
Mar 16 08:31:32 Aeris kernel: nf_hook_slow+0x37/0x96
Mar 16 08:31:32 Aeris kernel: ip_local_deliver+0xab/0xd3
Mar 16 08:31:32 Aeris kernel: ? inet_del_offload+0x3e/0x3e
Mar 16 08:31:32 Aeris kernel: ip_rcv+0x311/0x346
Mar 16 08:31:32 Aeris kernel: ? ip_local_deliver_finish+0x1b8/0x1b8
Mar 16 08:31:32 Aeris kernel: __netif_receive_skb_core+0x6ba/0x733
Mar 16 08:31:32 Aeris kernel: ? e1000_irq_enable+0x5a/0x67 [e1000e]
Mar 16 08:31:32 Aeris kernel: process_backlog+0x8c/0x12d
Mar 16 08:31:32 Aeris kernel: net_rx_action+0xfb/0x24f
Mar 16 08:31:32 Aeris kernel: __do_softirq+0xcd/0x1c2
Mar 16 08:31:32 Aeris kernel: do_softirq_own_stack+0x2a/0x40
Mar 16 08:31:32 Aeris kernel: </IRQ>
Mar 16 08:31:32 Aeris kernel: do_softirq+0x46/0x52
Mar 16 08:31:32 Aeris kernel: netif_rx_ni+0x21/0x35
Mar 16 08:31:32 Aeris kernel: macvlan_broadcast+0x117/0x14f [macvlan]
Mar 16 08:31:32 Aeris kernel: ? __clear_rsb+0x25/0x3d
Mar 16 08:31:32 Aeris kernel: macvlan_process_broadcast+0xe4/0x114 [macvlan]
Mar 16 08:31:32 Aeris kernel: process_one_work+0x14c/0x23f
Mar 16 08:31:32 Aeris kernel: ? rescuer_thread+0x258/0x258
Mar 16 08:31:32 Aeris kernel: worker_thread+0x1c3/0x292
Mar 16 08:31:32 Aeris kernel: kthread+0x111/0x119
Mar 16 08:31:32 Aeris kernel: ? kthread_create_on_node+0x3a/0x3a
Mar 16 08:31:32 Aeris kernel: ? call_usermodehelper_exec_async+0x116/0x11d
Mar 16 08:31:32 Aeris kernel: ret_from_fork+0x35/0x40
Mar 16 08:31:32 Aeris kernel: Code: 48 c1 eb 20 89 1c 24 e8 24 f9 ff ff 8b 54 24 04 89 df 89 c6 41 89 c5 e8 a9 fa ff ff 84 c0 75 b9 49 8b 86 80 00 00 00 a8 08 74 02 <0f> 0b 4c 89 f7 e8 03 ff ff ff 49 8b 86 80 00 00 00 0f ba e0 09
Mar 16 08:31:32 Aeris kernel: ---[ end trace 99731e0c4f1f7d5b ]---

aeris-diagnostics-20180316-0836.zip

-

I have been getting the same timeout issue ever since I updated to 6.5. I am unable to load the Docker or Fix Common Problems pages. Everything else works.

-

Looks like 5.7.20 is now on the downloads page. Will the docker be getting the 5.7.x track soon, or are you staying with the LTS stream only, which could mean being stuck on 5.6 for a while? (I am waiting for some features in 5.7.)

-

My whole cache seems to be hosed. BTRFS is doing some serious crunching on something right now after I removed the questionable drive from the cache pool. I will see whether it ever comes back up with a single drive or whether I need to rebuild everything (I have backups!). I still don't know if the drive is bad, but I suspect it might be.

-

I did: My whole cache seems to be hosed. BTRFS is doing some serious crunching on something right now after I removed the questionable drive from the cache pool. I will see whether it ever comes back up with a single drive or whether I need to rebuild everything (I have backups!). I still don't know if the drive is bad, but I suspect it might be.