-

Posts

698 -

Joined

-

Last visited

-

Days Won

1

Everything posted by zoggy

-

same as well, was smooth before and after. figured might as well take the low risk gamble

-

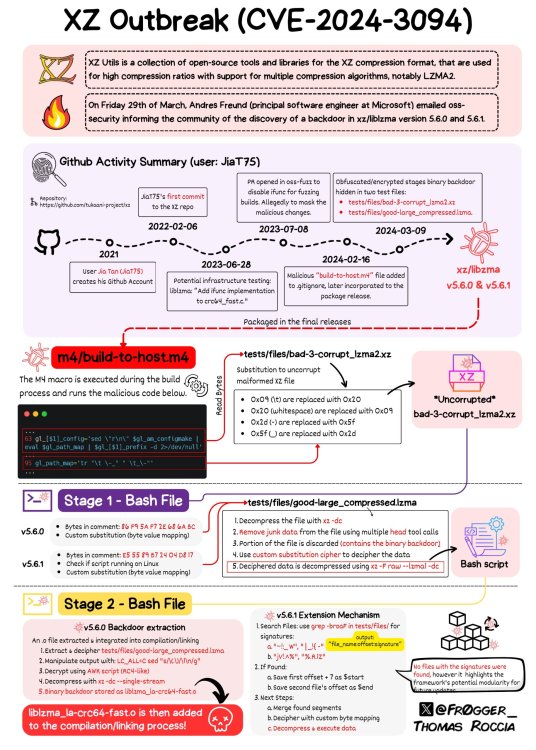

https://arstechnica.com/security/2024/04/what-we-know-about-the-xz-utils-backdoor-that-almost-infected-the-world/ pretty cool infographic that shows the timeline and all the stuff involved. you can see there are a few safeguards to keep it from being detected/run.. but there are probably also a few unknowns we have yet to discover. included the link to the article, which covers a bit more info for those that are curious

-

there is also this scanner: https://xz.fail/ (info: https://www.bleepingcomputer.com/news/security/new-xz-backdoor-scanner-detects-implant-in-any-linux-binary/) for those that don't want to rely on running xz to check versions. run ldd /path/to/xz, then drop the liblzma.so* file it lists into the scanner
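For anyone who wants to script the "ldd, then find the liblzma file" step, here is a minimal sketch. It only parses text that ldd has already printed; the sample output, its addresses, and the function name `find_liblzma_paths` are made up for illustration and will differ on a real system.

```python
import re


def find_liblzma_paths(ldd_output: str) -> list[str]:
    """Return the resolved liblzma paths found in `ldd` output text."""
    paths = []
    for line in ldd_output.splitlines():
        # Typical ldd line: "liblzma.so.5 => /usr/lib64/liblzma.so.5 (0x...)"
        match = re.search(r"liblzma\.so\S*\s+=>\s+(\S+)", line)
        if match:
            paths.append(match.group(1))
    return paths


# Hypothetical ldd output, not taken from a real machine.
sample = (
    "\tlinux-vdso.so.1 (0x00007ffc00000000)\n"
    "\tliblzma.so.5 => /usr/lib64/liblzma.so.5 (0x00007f0000000000)\n"
    "\tlibc.so.6 => /lib64/libc.so.6 (0x00007f0000200000)\n"
)

for path in find_liblzma_paths(sample):
    print(path)  # this is the file you would upload to the scanner
```

On a real box you would feed it the text from `ldd /path/to/xz` instead of the sample string.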

-

Sending notifications when options unchecked.

zoggy commented on CorneliousJD's report in Stable Releases

6.12.7rc2, just to note this bug is still outstanding:

-

updated without any issues

-

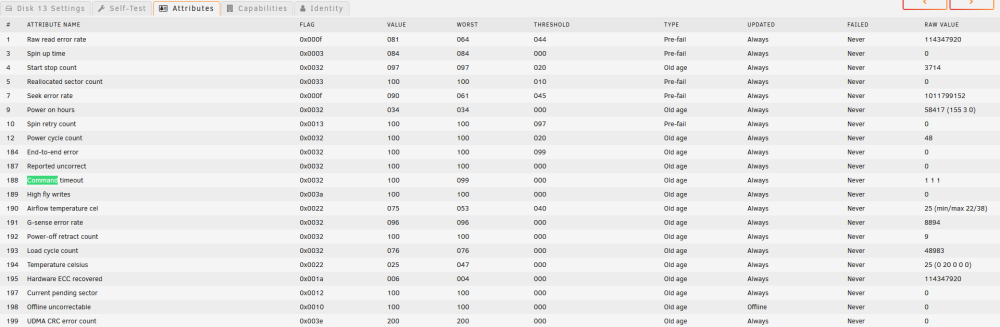

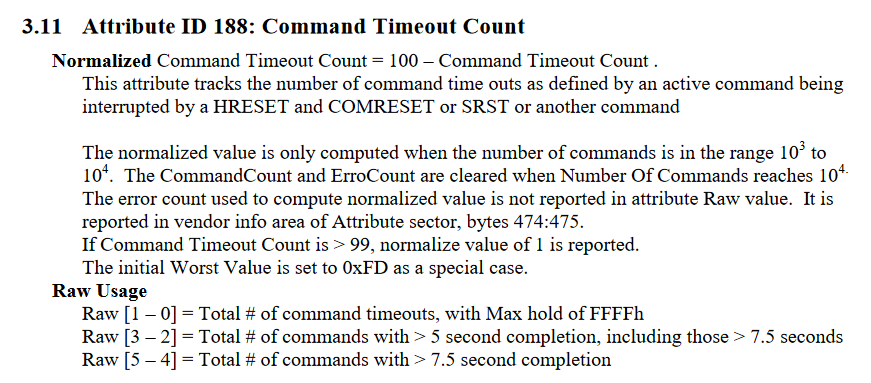

unraid 6.12.6 - after updating locations to 2024.02.10-o24, i now have a drive that is flashing. it stops if i uncheck 'flash warning', but it's not clear what warning it's trying to show...

recheck and on hover its:

spun disk up, now instead of N/A it says 0 there. i'm guessing the warnings are from:

is the plugin just not happy with the 'command timeout' value from Seagate drives now? 1 1 1 are three different buckets, so i would imagine you would take the max value of all three buckets.. in this case 1, and compare it to the threshold. 1 is less than 5, so it shouldn't be warning.

docs on the value and how Seagate does it: https://t1.daumcdn.net/brunch/service/user/axm/file/zRYOdwPu3OMoKYmBOby1fEEQEbU.pdf
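To make the "three buckets, take the max" idea concrete, here is a rough sketch. It assumes the raw 'command timeout' value is a 48-bit number holding three 16-bit counters, and that a warning should fire only when the largest counter reaches the threshold (5 in the post). Both points are assumptions for illustration; this is not how the plugin is known to work, and the function names are hypothetical.

```python
def decode_command_timeout(raw: int) -> tuple[int, int, int]:
    """Split a 48-bit raw value into three 16-bit counters (high, mid, low).

    Assumption: the raw value packs three independent 16-bit buckets.
    """
    return ((raw >> 32) & 0xFFFF, (raw >> 16) & 0xFFFF, raw & 0xFFFF)


def should_warn(raw: int, threshold: int = 5) -> bool:
    """Warn only if the worst bucket reaches the threshold (assumed rule)."""
    return max(decode_command_timeout(raw)) >= threshold


# Build the "1 1 1" case from the post: each bucket holds a count of 1.
raw_111 = (1 << 32) | (1 << 16) | 1

print(decode_command_timeout(raw_111))
print(should_warn(raw_111))
```

The point of decoding first is that the packed number itself is huge, so comparing it directly against a small threshold would always look like a failure even when every bucket is tiny.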

-

just fyi, had someone reach out to sab about your container with unrar being broken: it just wouldn't extract files, with direct unpack or without:

2024-01-25 14:51:03,438::DEBUG::[directunpacker:347] DirectUnpack Unrar output: UNRAR 7.00 beta 3 freeware Copyright (c) 1993-2023 Alexander Roshal
...
2024-01-25 14:51:31,550::DEBUG::[newsunpack:860] UNRAR output: UNRAR 7.00 beta 3 freeware Copyright (c) 1993-2023 Alexander Roshal
2024-01-25 14:51:31,550::INFO::[newsunpack:863] Unpacked 0 files/folders in 0 seconds

I had them switch to the linuxserver docker to test and everything worked fine. I sent them your way to share relevant logs and info (dunno if they have done that just yet) - just sharing here in case they never make it. btw, i personally run the unrar 7 betas without any issues.

-

after updating to the latest 13.x+ you will probably see the app 500 when loading. this is due to a change: go add the variable APP_KEY and set it using the following instructions: https://docs.speedtest-tracker.dev/faqs#i-get-a-warning-on-container-start-up-that-the-app_key-is-missing then it will load just fine again.
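If you would rather generate the value locally, here is a small sketch. It assumes the key uses the usual Laravel-style shape, the literal prefix `base64:` followed by 32 random bytes encoded as base64. That format is an assumption here; the linked FAQ is the authority on what the app actually expects, and `generate_app_key` is just an illustrative name.

```python
import base64
import secrets


def generate_app_key() -> str:
    """Build a key of the assumed form: 'base64:' + base64(32 random bytes)."""
    random_bytes = secrets.token_bytes(32)  # cryptographically strong randomness
    return "base64:" + base64.b64encode(random_bytes).decode("ascii")


key = generate_app_key()
print(key)  # paste this as the value of the APP_KEY container variable
```

Generate it once and keep it; changing the key later means anything encrypted with the old one can no longer be read.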

-

looking at logs, i see smbd core'd a few days ago (it then auto recovered). i never noticed anything on my side. just noting logs here in case others have seen it:

Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828234, 0] ../../source3/smbd/close.c:1397(close_directory)
Aug 19 20:30:27 husky smbd[15075]: close_directory: Could not get share mode lock for TV/Foundation 2021
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828285, 0] ../../source3/smbd/fd_handle.c:39(fd_handle_destructor)
Aug 19 20:30:27 husky smbd[15075]: PANIC: assert failed at ../../source3/smbd/fd_handle.c(39): (fh->fd == -1) || (fh->fd == AT_FDCWD)
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828296, 0] ../../lib/util/fault.c:173(smb_panic_log)
Aug 19 20:30:27 husky smbd[15075]: ===============================================================
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828315, 0] ../../lib/util/fault.c:174(smb_panic_log)
Aug 19 20:30:27 husky smbd[15075]: INTERNAL ERROR: assert failed: (fh->fd == -1) || (fh->fd == AT_FDCWD) in pid 15075 (4.17.7)
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828324, 0] ../../lib/util/fault.c:178(smb_panic_log)
Aug 19 20:30:27 husky smbd[15075]: If you are running a recent Samba version, and if you think this problem is not yet fixed in the latest versions, please consider reporting this bug, see https://wiki.samba.org/index.php/Bug_Reporting
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828333, 0] ../../lib/util/fault.c:183(smb_panic_log)
Aug 19 20:30:27 husky smbd[15075]: ===============================================================
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828348, 0] ../../lib/util/fault.c:184(smb_panic_log)
Aug 19 20:30:27 husky smbd[15075]: PANIC (pid 15075): assert failed: (fh->fd == -1) || (fh->fd == AT_FDCWD) in 4.17.7
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828708, 0] ../../lib/util/fault.c:292(log_stack_trace)
Aug 19 20:30:27 husky smbd[15075]: BACKTRACE: 32 stack frames:
Aug 19 20:30:27 husky smbd[15075]: #0 /usr/lib64/libgenrand-samba4.so(log_stack_trace+0x2e) [0x15498340e64e]
Aug 19 20:30:27 husky smbd[15075]: #1 /usr/lib64/libgenrand-samba4.so(smb_panic+0x9) [0x15498340e8a9]
Aug 19 20:30:27 husky smbd[15075]: #2 /usr/lib64/libsmbd-base-samba4.so(+0x4d10b) [0x1549837f010b]
Aug 19 20:30:27 husky smbd[15075]: #3 /usr/lib64/libtalloc.so.2(+0x44df) [0x1549833bd4df]
Aug 19 20:30:27 husky smbd[15075]: #4 /usr/lib64/libsmbd-base-samba4.so(file_free+0xd6) [0x1549837fd276]
Aug 19 20:30:27 husky smbd[15075]: #5 /usr/lib64/libsmbd-base-samba4.so(close_file_free+0x29) [0x15498382da99]
Aug 19 20:30:27 husky smbd[15075]: #6 /usr/lib64/libsmbd-base-samba4.so(+0x5cfd6) [0x1549837fffd6]
Aug 19 20:30:27 husky smbd[15075]: #7 /usr/lib64/libsmbd-base-samba4.so(+0x5d122) [0x154983800122]
Aug 19 20:30:27 husky smbd[15075]: #8 /usr/lib64/libsmbd-base-samba4.so(files_forall+0x19) [0x1549837fc0a9]
Aug 19 20:30:27 husky smbd[15075]: #9 /usr/lib64/libsmbd-base-samba4.so(file_close_conn+0x44) [0x1549837fc114]
Aug 19 20:30:27 husky smbd[15075]: #10 /usr/lib64/libsmbd-base-samba4.so(close_cnum+0x67) [0x154983848d27]
Aug 19 20:30:27 husky smbd[15075]: #11 /usr/lib64/libsmbd-base-samba4.so(smbXsrv_tcon_disconnect+0x4e) [0x15498387c00e]
Aug 19 20:30:27 husky smbd[15075]: #12 /usr/lib64/libsmbd-base-samba4.so(+0xbbc02) [0x15498385ec02]
Aug 19 20:30:27 husky smbd[15075]: #13 /usr/lib64/libtevent.so.0(tevent_common_invoke_immediate_handler+0x17a) [0x1549833d0e2a]
Aug 19 20:30:27 husky smbd[15075]: #14 /usr/lib64/libtevent.so.0(tevent_common_loop_immediate+0x16) [0x1549833d0e46]
Aug 19 20:30:27 husky smbd[15075]: #15 /usr/lib64/libtevent.so.0(+0xebfb) [0x1549833d6bfb]
Aug 19 20:30:27 husky smbd[15075]: #16 /usr/lib64/libtevent.so.0(+0xcef7) [0x1549833d4ef7]
Aug 19 20:30:27 husky smbd[15075]: #17 /usr/lib64/libtevent.so.0(_tevent_loop_once+0x91) [0x1549833cfba1]
Aug 19 20:30:27 husky smbd[15075]: #18 /usr/lib64/libtevent.so.0(tevent_common_loop_wait+0x1b) [0x1549833cfe7b]
Aug 19 20:30:27 husky smbd[15075]: #19 /usr/lib64/libtevent.so.0(+0xce97) [0x1549833d4e97]
Aug 19 20:30:27 husky smbd[15075]: #20 /usr/lib64/libsmbd-base-samba4.so(smbd_process+0x817) [0x154983846a37]
Aug 19 20:30:27 husky smbd[15075]: #21 /usr/sbin/smbd(+0xb090) [0x5654df159090]
Aug 19 20:30:27 husky smbd[15075]: #22 /usr/lib64/libtevent.so.0(tevent_common_invoke_fd_handler+0x91) [0x1549833d08c1]
Aug 19 20:30:27 husky smbd[15075]: #23 /usr/lib64/libtevent.so.0(+0xee07) [0x1549833d6e07]
Aug 19 20:30:27 husky smbd[15075]: #24 /usr/lib64/libtevent.so.0(+0xcef7) [0x1549833d4ef7]
Aug 19 20:30:27 husky smbd[15075]: #25 /usr/lib64/libtevent.so.0(_tevent_loop_once+0x91) [0x1549833cfba1]
Aug 19 20:30:27 husky smbd[15075]: #26 /usr/lib64/libtevent.so.0(tevent_common_loop_wait+0x1b) [0x1549833cfe7b]
Aug 19 20:30:27 husky smbd[15075]: #27 /usr/lib64/libtevent.so.0(+0xce97) [0x1549833d4e97]
Aug 19 20:30:27 husky smbd[15075]: #28 /usr/sbin/smbd(main+0x1489) [0x5654df156259]
Aug 19 20:30:27 husky smbd[15075]: #29 /lib64/libc.so.6(+0x236b7) [0x1549831d76b7]
Aug 19 20:30:27 husky smbd[15075]: #30 /lib64/libc.so.6(__libc_start_main+0x85) [0x1549831d7775]
Aug 19 20:30:27 husky smbd[15075]: #31 /usr/sbin/smbd(_start+0x21) [0x5654df156b31]
Aug 19 20:30:27 husky smbd[15075]: [2023/08/19 20:30:27.828898, 0] ../../source3/lib/dumpcore.c:315(dump_core)
Aug 19 20:30:27 husky smbd[15075]: dumping core in /var/log/samba/cores/smbd

no actual core present in the dir:

:/var/log/samba/cores/smbd# ls -alh
total 0
drwx------ 2 root root 40 Jul 14 22:48 ./
drwx------ 9 root root 180 Jul 14 22:49 ../

husky-diagnostics-20230823-0049.zip

-

i've heard this is a solution to use: https://github.com/tiredofit/docker-db-backup you basically don't touch your actual db; you just have that docker run backups from it periodically to a place of your choosing, and then you back that location up.

-

[Support] D34DC3N73R - Netdata GLIBC (GPU Enabled)

zoggy replied to D34DC3N73R's topic in Docker Containers

netdata changed a bit of stuff recently (their icons are now svg), so the fallout is that the unraid netdata template's icon is no longer there. Since unraid doesn't support loading svg, the easy fix for the icon is just to point to the old image from an older version where it was still there: https://raw.githubusercontent.com/netdata/netdata/v1.40/web/gui/dashboard/images/ms-icon-70x70.png

-

if it helps track this down: i do not use nfs, it is set to no in unraid.

-

Jun 30 16:55:37 husky unraid-api[8238]: ✔️ UNRAID API started successfully!
Jun 30 16:55:39 husky rpc.statd[8351]: Version 2.6.2 starting
Jun 30 16:55:39 husky rpc.statd[8353]: Version 2.6.2 starting
Jun 30 16:55:39 husky sm-notify[8352]: Version 2.6.2 starting
Jun 30 16:55:39 husky rpc.statd[8351]: Failed to read /var/lib/nfs/state: Success
Jun 30 16:55:39 husky rpc.statd[8351]: Initializing NSM state
Jun 30 16:55:39 husky sm-notify[8354]: Version 2.6.2 starting
Jun 30 16:55:39 husky sm-notify[8354]: Already notifying clients; Exiting!
Jun 30 16:55:39 husky rpc.statd[8353]: Failed to register (statd, 1, udp): svc_reg() err: RPC: Success
Jun 30 16:55:39 husky rpc.statd[8351]: Failed to register (statd, 1, tcp): svc_reg() err: RPC: Success
Jun 30 16:55:39 husky rpc.statd[8351]: Failed to register (statd, 1, udp6): svc_reg() err: RPC: Success
Jun 30 16:55:39 husky rpc.statd[8351]: Failed to register (statd, 1, tcp6): svc_reg() err: RPC: Success
Jun 30 16:55:41 husky kernel: RPC: Registered named UNIX socket transport module.
Jun 30 16:55:41 husky kernel: RPC: Registered udp transport module.
Jun 30 16:55:41 husky kernel: RPC: Registered tcp transport module.
Jun 30 16:55:41 husky kernel: RPC: Registered tcp NFSv4.1 backchannel transport module.
Jun 30 16:55:41 husky rpc.nfsd[8486]: unable to bind AF_INET TCP socket: errno 98 (Address already in use)
Jun 30 16:55:41 husky rpc.nfsd[8485]: unable to bind AF_INET TCP socket: errno 98 (Address already in use)
Jun 30 16:55:42 husky kernel: NFSD: Using UMH upcall client tracking operations.
Jun 30 16:55:42 husky kernel: NFSD: starting 90-second grace period (net f0000000)
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 1, udp): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 1, tcp): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 1, udp6): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 1, tcp6): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 2, udp): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 2, tcp): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 2, udp6): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 2, tcp6): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 3, udp): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 3, tcp): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8508]: Version 2.6.2 starting
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 3, udp6): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: Failed to register (mountd, 3, tcp6): svc_reg() err: RPC: Success
Jun 30 16:55:42 husky rpc.mountd[8507]: mountd: No V2 or V3 listeners created!
Jun 30 16:55:42 husky rpc.mountd[8510]: Version 2.6.2 starting

Just upgraded to 6.12.2 from 6.12.1 and seeing some new entries in log after booting. looking at 6.12.1 logs, it normally looks like:

Jun 20 17:08:42 husky unraid-api[8534]: ✔️ UNRAID API started successfully!
Jun 20 17:08:43 husky kernel: RPC: Registered named UNIX socket transport module.
Jun 20 17:08:43 husky kernel: RPC: Registered udp transport module.
Jun 20 17:08:43 husky kernel: RPC: Registered tcp transport module.
Jun 20 17:08:43 husky kernel: RPC: Registered tcp NFSv4.1 backchannel transport module.
Jun 20 17:08:43 husky rpc.nfsd[8619]: unable to bind AF_INET TCP socket: errno 98 (Address already in use)
Jun 20 17:08:43 husky rpc.nfsd[8619]: unable to bind AF_INET TCP socket: errno 98 (Address already in use)
Jun 20 17:08:43 husky rpc.nfsd[8619]: unable to set any sockets for nfsd
Jun 20 17:08:44 husky kernel: NFSD: Using UMH upcall client tracking operations.
Jun 20 17:08:44 husky kernel: NFSD: starting 90-second grace period (net f0000000)

husky-diagnostics-20230630-1747.zip

-

Tips and Tweaks Plugin to possibly improve performance of Unraid and VMs

zoggy replied to dlandon's topic in Plugin Support

tested when upgrading to 6.12.1 and yep worked fine. so no idea what happened on the 6.11.5->6.12.0, gremlins.

-

main tab > array operations > move -- Move will immediately invoke the Mover. (Schedule)

-

6.11.5 -> 6.12 nvidia driver upgrade when legacy driver is set

zoggy commented on zoggy's report in Stable Releases

Thanks for the detailed response; what happened makes sense now. About the card: I just needed a card in the box to use with a monitor from time to time or to pass to a VM, as there is no iGPU. It was a cheap (around $40) x1 card that is fanless and single slot. I don't need transcoding, so no worries.

-

the old ca backup app used to just inherit how the dockers were sorted in unraid docker gui. it would show them in "unraid docker gui" reverse order, which is how it would stop them. then when it starts back up it does the reverse of that (aka, the normal "unraid docker page gui" order)
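A tiny sketch of the ordering described above, assuming the GUI order is just a list of container names. The names and the helper `stop_and_start_order` are hypothetical, and this is not the old app's actual code.

```python
def stop_and_start_order(gui_order: list[str]) -> tuple[list[str], list[str]]:
    """Return (stop_order, start_order) derived from the docker GUI order.

    Containers are stopped in reverse GUI order and started back up in
    normal GUI order, so whatever is listed first comes up first.
    """
    stop_order = list(reversed(gui_order))
    start_order = list(gui_order)
    return stop_order, start_order


# Hypothetical container names in the order shown on the docker page.
gui_order = ["mariadb", "nextcloud", "sabnzbd"]
stop_order, start_order = stop_and_start_order(gui_order)

print("stop: ", stop_order)
print("start:", start_order)
```

This is why putting a database above the apps that depend on it works out: it is the last thing stopped and the first thing started.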

-

ok took me a sec but I see: by default it only backs up the "internal volume", but then down below you can exclude things from within that via the selector. At first glance i didn't realize you could drill down, but I see now. Thanks! Dunno how hard it would be to have that tree auto expand to the 'internal volume' path, but prob not worth the hassle

-

How would one exclude a folder/file from the flash backup? The nvidia plugin driver is ~250M, so I would not want to include "config/plugins/nvidia-driver/packages/*"

-

upgraded to 6.12, got the warning that i needed to install appdata backup. did so, it found my ca backup2 and asked to migrate, which pulled over most things. i noticed it did NOT pull over my exclude list, which is: .kodi-novnc/.kodi/userdata/Thumbnails,/mnt/cache/appdata/mariadb-10.6/

Which i assume is understandable, since users are now expected to put exclusions on each container where it makes sense. But it would prob be good to note in the migrate blurb that it doesn't handle this.

which leads to something that isn't clear now: in the container where you can exclude.. previously the exclude list operated from the parent folder of the appdata folder, so the exclude was: .kodi-novnc/.kodi/userdata/Thumbnails

but with this app, does the exclude path get constructed from the container root folder or not? as now my kodi container base is: /mnt/cache/appdata/.kodi-novnc

so would I have to do: /mnt/cache/appdata/.kodi-novnc/.kodi/userdata/Thumbnails
or: .kodi/userdata/Thumbnails
or: Thumbnails

-

On the dashboard, the "system information" main panel cannot be removed from view. The info it shows is somewhat redundant, as it's already shown in the top banner, but more importantly there are icons to stop/restart/shutdown the array that one could easily click by accident. Please allow users to remove this panel.

Also, I'm shocked you did not put the 'mover' button on this or the cache panel.. feature request?

-

6.11.5 -> 6.12 nvidia driver upgrade when legacy driver is set

zoggy posted a report in Stable Releases

I have an NVIDIA GeForce GT 710, which is not supported in the 500 series drivers, so I'm forced to use the legacy driver (v470.182.03). This is set with the Nvidia Driver plugin.

While on 6.11.5 and doing the update os to 6.12, it fires off the plugin helper, which upgrades the nvidia driver to the latest v535.54.03. This was suboptimal, as this driver does not support my video card. It would be nice if the plugin helper would skip the upgrade if the user is on the legacy 470.x drivers (as that's probably by design).

Before rebooting to have it boot up on 6.12, I tried downgrading back to 470.141.03, which it said it did.

Jun 15 16:50:00 husky emhttpd: cmd: /usr/local/emhttp/plugins/nvidia-driver/include/exec.sh update_version 470.141.03

Once updated+downloaded, rebooting to 6.12 it booted up with nvidia driver v535.54.03, throwing a message that my video card is not supported and that I need to use 470.x. I downgraded again and rebooted, and this time it DID boot up on 470.

Unable to provide logs to show more details, since the Tips and Tweaks plugin 'Enable syslog Archiving?' option looks to have stopped working on 6.12. So the only 6.12 log I have is the current one, where it shows it loading the correct 470 legacy drivers as expected.

-

Tips and Tweaks Plugin to possibly improve performance of Unraid and VMs

zoggy replied to dlandon's topic in Plugin Support

anyone else seeing that since upgrading to 6.12, the Tips and Tweaks plugin 'Enable syslog Archiving?' option no longer results in the syslog getting archived on restart? i had an issue after upgrading to 6.12 and had to restart to fix it. I went to get logs and noticed my last saved logs were from the 6.11.5->6.12 reboot. I've not had a chance to restart for a 3rd time to see if it was just an issue during the first reboot of an upgrade or what

-

Windows 10 w/ Firefox 114.0.1. The notifications menu dropdown causes a horizontal scrollbar, and you can see it is slightly misaligned

-

May 27 08:05:07 husky root: Hard File Path: /mnt/cache/TV/TV/Shark Tank/Season 14/<removed>.mkv
May 27 08:05:07 husky root: LINK Count: 1
May 27 08:05:07 husky root: Hard Link Status: false
...
May 27 08:35:18 husky move: file: /mnt/cache/TV/TV/Shark Tank/Season 14/<removed>.mkv
May 27 08:35:30 husky root: mover: finished

When mover fires off there is a bit of log spam for hard link stuff.. then the actual mover log. Any way a user can turn off the hard link set of logs?
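Until there is a setting for it, one workaround is to filter the noise out when reading the log. This is only a reading-side sketch: it assumes the hard link lines always contain one of the three phrases seen in the paste above, and it does not stop mover from writing them. The function name `drop_hard_link_lines` is made up for illustration.

```python
# Phrases taken from the log lines quoted in the post.
HARD_LINK_MARKERS = ("Hard File Path:", "LINK Count:", "Hard Link Status:")


def drop_hard_link_lines(lines: list[str]) -> list[str]:
    """Return the log lines that are not part of the hard link chatter."""
    return [
        line
        for line in lines
        if not any(marker in line for marker in HARD_LINK_MARKERS)
    ]


sample_log = [
    "May 27 08:05:07 husky root: Hard File Path: /mnt/cache/TV/TV/Shark Tank/Season 14/<removed>.mkv",
    "May 27 08:05:07 husky root: LINK Count: 1",
    "May 27 08:05:07 husky root: Hard Link Status: false",
    "May 27 08:35:18 husky move: file: /mnt/cache/TV/TV/Shark Tank/Season 14/<removed>.mkv",
    "May 27 08:35:30 husky root: mover: finished",
]

for line in drop_hard_link_lines(sample_log):
    print(line)
```

Run against a saved copy of the syslog, this leaves only the actual move entries and the "mover: finished" line.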