Leaderboard

Popular Content

Showing content with the highest reputation on 10/27/17 in all areas

-

I was asked by another user to post this update in this thread as well. It appears the issue relating to Nested Page Tables on AMD platforms has been resolved by a patch created by Paolo Bonzini: https://marc.info/?l=kvm&m=150891016802546&w=2

Another user actually found the troublesome code segment and supplied a hacky patch, then Paolo saw the real problem and implemented a proper solution. That said, it hasn't made its way into a full kernel release yet, so we are going to patch it in manually with the release of the next rc. Needless to say, we are incredibly excited at the prospect of this patch truly resolving all these nasty GPU pass-through performance problems. Ryzen offers a strong use case for unRAID and VMs with GPU pass-through, so long as it can stay on par with Intel for performance and price. I'm excited to hear what you guys achieve after the next update.

All the best,
Jon

6 points

-

OK, I know what happened with the issue you reported, FreeMan. The bottom line is that the app didn't leave any files/folders behind; it simply never considered them part of the job. Let me explain.

Given a folder selected for transfer (for either scatter or gather), the app builds a list of its first-level children and then executes an rsync command for each one of them. If there are no children (the folder is empty), the app ignores the folder, since there's no work to be done. But, especially in the case of gather, where one would reasonably want the (empty) folder to be removed, this is not the correct behavior. It's an edge case that I hadn't considered, and it needs to be looked into.

Let me show you how this applies to your case. For context (and based on the logs), you made 3 runs of the app: the first one was successful, and the other two were interrupted by rsync's error 23. This is how it played out for some of the movies you were gathering.

- Movies/13 Going on 30

I'll break it down by run (it wasn't included in the first run, so I'll start with the second, gather to disk5). You had files/folders on disk1, disk3, disk6 and disk11. In particular, disk11 had two 6-byte folders, one of which caused the error 23.

Second run

I: 2017/10/24 19:51:30 core.go:656: getFolders:Readdir(9)
I: 2017/10/24 19:51:30 core.go:666: getFolders:Executing find "/mnt/disk1/Movies/13 Going on 30/." ! -name . -prune -exec du -bs {} +
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 747502 /mnt/disk1/Movies/13 Going on 30/./13 Going on 30-fanart.jpg
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 168352 /mnt/disk1/Movies/13 Going on 30/./13 Going on 30.fanart.jpg
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 904917533 /mnt/disk1/Movies/13 Going on 30/./13 Going on 30.mp4
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 170020 /mnt/disk1/Movies/13 Going on 30/./13 Going on 30.tbn
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 38400 /mnt/disk1/Movies/13 Going on 30/./Thumbs.db
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 120161 /mnt/disk1/Movies/13 Going on 30/./fanart.jpg
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 170020 /mnt/disk1/Movies/13 Going on 30/./folder.jpg
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 143184 /mnt/disk1/Movies/13 Going on 30/./movie.tbn
I: 2017/10/24 19:51:30 core.go:669: getFolders:find(/mnt/disk1/Movies/13 Going on 30/.): 170020 /mnt/disk1/Movies/13 Going on 30/./poster.tbn
I: 2017/10/24 19:51:31 core.go:656: getFolders:Readdir(8)
I: 2017/10/24 19:51:31 core.go:666: getFolders:Executing find "/mnt/disk3/Movies/13 Going on 30/." ! -name . -prune -exec du -bs {} +
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 9374657 /mnt/disk3/Movies/13 Going on 30/./.actors
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 1346357 /mnt/disk3/Movies/13 Going on 30/./extrathumbs
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 287008 /mnt/disk3/Movies/13 Going on 30/./13 Going on 30-poster.jpg
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 83335 /mnt/disk3/Movies/13 Going on 30/./banner.jpg
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 355158 /mnt/disk3/Movies/13 Going on 30/./clearart.png
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 879830 /mnt/disk3/Movies/13 Going on 30/./disc.png
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 410197 /mnt/disk3/Movies/13 Going on 30/./landscape.jpg
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk3/Movies/13 Going on 30/.): 38371 /mnt/disk3/Movies/13 Going on 30/./logo.png
I: 2017/10/24 19:51:31 core.go:656: getFolders:Readdir(2)
I: 2017/10/24 19:51:31 core.go:666: getFolders:Executing find "/mnt/disk6/Movies/13 Going on 30/." ! -name . -prune -exec du -bs {} +
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk6/Movies/13 Going on 30/.): 5320 /mnt/disk6/Movies/13 Going on 30/./13 Going on 30.nfo
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk6/Movies/13 Going on 30/.): 12451 /mnt/disk6/Movies/13 Going on 30/./movie.xml
I: 2017/10/24 19:51:31 core.go:656: getFolders:Readdir(2)
I: 2017/10/24 19:51:31 core.go:666: getFolders:Executing find "/mnt/disk11/Movies/13 Going on 30/." ! -name . -prune -exec du -bs {} +
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk11/Movies/13 Going on 30/.): 6 /mnt/disk11/Movies/13 Going on 30/./.actors
I: 2017/10/24 19:51:31 core.go:669: getFolders:find(/mnt/disk11/Movies/13 Going on 30/.): 6 /mnt/disk11/Movies/13 Going on 30/./extrathumbs

The app did its thing, then was interrupted:

W: 2017/10/24 20:03:36 core.go:1016: Command Interrupted: rsync -avPRX "Movies/13 Going on 30/extrathumbs" "/mnt/disk5/" (exit status 23 : Partial transfer due to error)

On the third run, you had this:

I: 2017/10/24 20:12:04 core.go:656: getFolders:Readdir(19)
I: 2017/10/24 20:12:04 core.go:666: getFolders:Executing find "/mnt/disk5/Movies/13 Going on 30/." ! -name . -prune -exec du -bs {} +
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 9378446 /mnt/disk5/Movies/13 Going on 30/./.actors
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 747502 /mnt/disk5/Movies/13 Going on 30/./13 Going on 30-fanart.jpg
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 168352 /mnt/disk5/Movies/13 Going on 30/./13 Going on 30.fanart.jpg
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 904917533 /mnt/disk5/Movies/13 Going on 30/./13 Going on 30.mp4
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 170020 /mnt/disk5/Movies/13 Going on 30/./13 Going on 30.tbn
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 38400 /mnt/disk5/Movies/13 Going on 30/./Thumbs.db
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 120161 /mnt/disk5/Movies/13 Going on 30/./fanart.jpg
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 170020 /mnt/disk5/Movies/13 Going on 30/./folder.jpg
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 143184 /mnt/disk5/Movies/13 Going on 30/./movie.tbn
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 170020 /mnt/disk5/Movies/13 Going on 30/./poster.tbn
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 1346357 /mnt/disk5/Movies/13 Going on 30/./extrathumbs
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 287008 /mnt/disk5/Movies/13 Going on 30/./13 Going on 30-poster.jpg
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 83335 /mnt/disk5/Movies/13 Going on 30/./banner.jpg
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 355158 /mnt/disk5/Movies/13 Going on 30/./clearart.png
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 879830 /mnt/disk5/Movies/13 Going on 30/./disc.png
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 410197 /mnt/disk5/Movies/13 Going on 30/./landscape.jpg
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 38371 /mnt/disk5/Movies/13 Going on 30/./logo.png
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 5320 /mnt/disk5/Movies/13 Going on 30/./13 Going on 30.nfo
I: 2017/10/24 20:12:04 core.go:669: getFolders:find(/mnt/disk5/Movies/13 Going on 30/.): 12451 /mnt/disk5/Movies/13 Going on 30/./movie.xml
I: 2017/10/24 20:12:05 core.go:656: getFolders:Readdir(0)
I: 2017/10/24 20:12:05 core.go:659: getFolders:No subdirectories under /mnt/disk11/Movies/13 Going on 30

All files/folders had already been gathered into disk5 (as a result of the second run), and disk11 was left with an empty folder after the operation was interrupted. That's why the webGui shows the movie as still present on disk11 (as per the explanation above).
To be honest, I'm not sure what rsync's error 23 means for that 6-byte folder; it's a bit strange.

Other examples:

- Movies/2001 A Space Odyssey (1968)

First Run (wasn't included)

Second Run
I: 2017/10/24 19:51:31 core.go:656: getFolders:Readdir(0)
I: 2017/10/24 19:51:31 core.go:659: getFolders:No subdirectories under /mnt/disk11/Movies/2001 A Space Odyssey (1968)

Third Run
I: 2017/10/24 20:12:05 core.go:656: getFolders:Readdir(0)
I: 2017/10/24 20:12:05 core.go:659: getFolders:No subdirectories under /mnt/disk11/Movies/2001 A Space Odyssey (1968)

- Movies/13 Hours The Secret Soldiers of Benghazi (2016)

First Run (wasn't included)

Second Run
I: 2017/10/24 19:51:31 core.go:656: getFolders:Readdir(0)
I: 2017/10/24 19:51:31 core.go:659: getFolders:No subdirectories under /mnt/disk11/Movies/13 Hours The Secret Soldiers of Benghazi (2016)

Third Run
I: 2017/10/24 20:12:05 core.go:656: getFolders:Readdir(0)
I: 2017/10/24 20:12:05 core.go:659: getFolders:No subdirectories under /mnt/disk11/Movies/13 Hours The Secret Soldiers of Benghazi (2016)

And so on, similarly for the other folders (movies) you mentioned.

I hope this explains what happened. The "don't do anything if it's empty" issue is something I'll get fixed.

2 points

-

OK, I installed the Nerd Tools plugin, then installed the kbd package, and that did let me change the console keyboard layout successfully. I would still suggest that at least the kbd package should make its way into core unRAID. There's nothing nerdy about changing the keyboard layout! To make the setting persistent, I had to edit the go file accordingly. I think a selection field in the webGUI, with the kbd package installed by default, would make things much easier.

What is unfortunate, though, is that the otherwise awesome local webGUI doesn't pick up the changed keyboard layout. Is there any way to fix that? Did I miss something? Thoughts?

Cheers,
Marcel

2 points

-

As briefly requested in the latest 6.20 beta thread: please add an option to select the keyboard layout used in both the GUI and the console boot modes. I am sure a variety of users would benefit from this, as typing strong passwords in the GUI currently requires in-depth knowledge of where the US keys are located on one's native keyboard.

This would also resolve my difficulties in reaching the unRAID page from GUI mode, since my server runs on a non-standard port. Typing the colon is currently a real pain with my German layout; I actually have to go to the Firefox settings page to copy and paste the colon from "http://" if I want to use the webGUI.

Any solution, from simple go script changes to a real setting in the webGUI, would suffice. This is not a request to translate the webGUI.

Possible duplicate: https://lime-technology.com/forum/index.php?topic=40989.0

I have installed NerdPack with kbd-1.15.3-x86_64-2 remotely and will try adding it to my go script as soon as I get home.

1 point

-

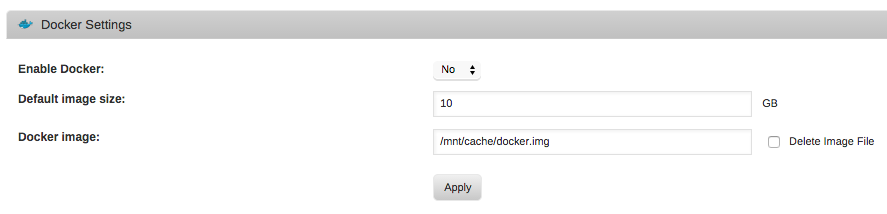

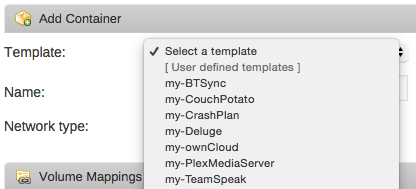

All,

Should you wish to recreate your Docker virtual disk image from scratch but retain your application data (so that certain apps don't need to be reconfigured), the process is simple.

Step 1: Delete your previous image file

Log in to your system from the unRAID webGui (http://tower, or http://tower.local from a Mac, by default). Navigate to the Docker tab and stop the Docker service if it isn't stopped already. Check the Delete Image File checkbox next to the Docker image, then click the Delete button (this may take some time depending on the size of your image). After the file has been deleted, simply re-enable the Docker service, and the image will be recreated in the same storage location with the same name.

Step 2: Re-download your applications

With the Docker service restarted, click Add Container. From the Template drop-down, select one of your previously downloaded applications from the top of the list, under User defined templates. If none of your volume mappings or port mappings have changed, you can click Create immediately to start the download. Repeat this for each application you wish to re-download. Toggle Autostart for each application after it downloads (only if desired).

Step 3: There is no step 3...

Seriously... What, you expected more steps? Nope! You're done!

1 point

-

This is because IRQ16, which is used by the SAS2LP, got disabled. Rebooting will fix it (but it may happen again).

1 point

-

Stop the Docker container first, then run this command as root (in the unRAID console you are usually root anyway):

chown -R nobody:users /dev/dvb

Afterwards, restart the container.

1 point

-

In case you missed it, JonP posted in the version 6.4 pre-release support forum that Limetech is aware of the fix and plans to include it in the next 6.4 beta release for testing.

1 point

-

The cache drive, and caching of writes to the array, were introduced back when average write speeds directly to the array were much slower than they are nowadays. Many people (including myself) only use the cache drive for applications (appdata and the downloads share); all other writes to user shares go directly to the array. (That, and I find it impossible to justify to the "boss" why I need a larger cache drive when neither she nor I see any real improvement from caching writes to the media shares.)

You still use the cache drive for downloads, but post-processing/moving by Couch / Radarr / Sonarr will bypass the cache and go to the array. Now, if your cache drive isn't big enough to actually handle the size of the downloads, try setting the download share to Use cache: Prefer. When an article doesn't fit on the cache drive, it will fall back to the array.

1 point
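To make the "Prefer" fallback in the post above concrete, here is a minimal Go sketch. It is a hypothetical model, not unRAID's implementation: `chooseTarget` and the `minFree` reserve are illustrative assumptions, and the real allocation logic (together with the mover) may use different criteria.

```go
package main

import "fmt"

// chooseTarget is a hypothetical model of a share set to "Use cache: Prefer":
// new data lands on the cache while it fits (keeping an assumed minFree
// reserve), otherwise it falls back to the array.
func chooseTarget(cacheFree, fileSize, minFree uint64) string {
	// Check cacheFree >= fileSize first so the subtraction cannot underflow.
	if cacheFree >= fileSize && cacheFree-fileSize >= minFree {
		return "cache"
	}
	return "array"
}

func main() {
	const gib = uint64(1) << 30
	// Example: 50 GiB free on the cache with a 2 GiB reserve.
	fmt.Println(chooseTarget(50*gib, 8*gib, 2*gib))  // fits: cache
	fmt.Println(chooseTarget(50*gib, 49*gib, 2*gib)) // would eat the reserve: array
}
```

The unsigned arithmetic is guarded deliberately; without the first comparison, a file larger than the free space would wrap around and wrongly be sent to the cache.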

-

Without parity there's nothing to check, so the array will start normally. There's a small chance that one or more disks will come up unmountable, but the same is true with parity in that regard.

1 point

-

Haven't been around in a while, but I just wanted to say this build is still running strong after almost 5 years. Other than the one Seagate drive that died and was replaced, I've had no issues whatsoever.

1 point

-

1 point

-

Hey everyone. I'm looking for some help porting Booksonic into a Docker container. It's a fork of Subsonic, but for audiobooks. I might have been able to do it myself if it were a .deb file, by taking other Subsonic containers and modifying them, but the Booksonic binaries are released as a .WAR file on the website. Thanks in advance for any help. Files can be found at http://booksonic.org/

1 point

-

I moved this to the Lounge, as it is less of a support issue and more a general NAS interest topic.

On the topic: I have been an unRAID user for about 8 years and have never gone looking for something else. A computer professional by trade, I enjoyed the level of customization the platform offered, and felt it was a good platform for backups and media files, as you describe. At the time I bought it, I explored a number of options (most notably MS Server), but in the end unRAID turned out to be right for me, and I can say without reservation that the product has recently taken a quantum leap forward in functionality and utility. As a little disclaimer, I do not work for LimeTech (none of the moderators do), nor do I have any financial interest in the company. I am just a user who likes the product and agreed to help moderate the forum.

The most important thing to know about unRAID (and maybe other NAS platforms as well) is that "set it and forget it" is all about good planning and being prepared. Components that might seem optional, like removable drive cages, good drives, and good cooling, pay off in the long run. Doing your homework, so you understand how unRAID works and the common solutions to typical problems, is also important!

I'll give an example of a new-user scenario that has played out a number of times. A user sets up their server with directly mounted drives, SATA cables, power splitters, etc. in a computer case. With some tinkering, the server comes up. They create the array, load most or all of their data, and then, a few weeks or months later, one of the drives is "red-balled" (meaning kicked from the array and simulated using unRAID's redundancy). The user assumes the red-ball means the disk has failed. (Most commonly, a red-ball is caused by a loose cable, either disturbed by the owner tinkering inside the case or a never-secured connection that jiggles loose and intermittently causes errors. The least common cause of a red-ball is a drive failure.)
They then replace the drive and, with no drive cages, have to do surgery inside the case, often giving in to the irresistible urge to optimize the cable routing, and accidentally disturb the cables of other drives. They then try to rebuild using the other disks, and halfway through another drive red-balls. The user panics and starts looking at data recovery programs. Before you know it, 2 or 3 missteps have created a mess requiring heroics from the community to help salvage the data, which is usually at least partially successful, but nonetheless traumatic.

This is so easily avoided. Plan your server. Buy drive cages if you expect to expand beyond a few drives. Read the Backblaze reports and avoid the drives with high failure rates. Spend some time following the forum and reviewing the wiki so you know what to do when a red-ball happens.

The only Achilles heel I'd mention is the lack of (so-called) dual parity. Dual parity would help in situations where two drives fail simultaneously (a very rare circumstance), or where a drive fails and, during the rebuild, another drive throws an unexpected error. There is continuing debate about when this feature should be implemented, and many of us are lobbying for sooner rather than later. But in my 8 years here helping hundreds of users with all manner of failures, I've seen only a small handful of situations where it would have been needed in the hands of a knowledgeable user, and quite a few more, similar to the story above, where dual parity might have helped a newbie who had shot themselves in the foot recover more easily or more completely.

Without speaking negatively of SnapRAID, with which I have no experience, I can wholeheartedly recommend unRAID as a NAS platform.
The most important features to me are the real-time protection it provides; the fact that each drive contains an autonomous file system and can be individually recovered or moved from one server to another; the ability to easily replace failing or older drives with newer, larger ones; and the user shares feature, which enables multiple drives to appear as a single "share" to a media player or client machine. The recent Docker integration has allowed me to run some applications on my unRAID server that were previously running elsewhere. My plan for the future is to virtualize my Windows workstation, further leveraging my unRAID server and meaning fewer machines running 24x7.

Good luck with your decision. I am sure others will share their insights.

1 point